Applications of XAI in Different Domains

Applications in Healthcare

The operating room of the future will feature AI assistants that don't just predict complications but explain their reasoning in surgical terms. Current systems can already highlight which patient measurements (say, a rising lactate level combined with falling blood pressure) point to a risk of postoperative sepsis. This isn't just about transparency; it creates a shared mental model between clinicians and algorithms.

Personalized medicine also gains from XAI. When recommending cancer treatments, advanced systems can identify which genetic markers and tumor characteristics make certain therapies more likely to work. Oncologists gain not just predictions but actionable biological insights, allowing them to tailor treatment more precisely while retaining clinical oversight.

Applications in Finance

Modern fraud detection resembles a digital detective explaining its case. Rather than issuing cryptic risk scores, systems now itemize red flags: "This $4,800 electronics purchase from a new device in Oslo conflicts with the customer's established pattern of small local purchases in Boston." Such specificity turns compliance from a black box into an auditable process and reduces the customer friction caused by false positives.
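A minimal sketch of how itemized red flags like these might be produced, assuming a hypothetical per-customer baseline (home city, known devices, past purchase amounts) checked by simple rules. The class and function names and the 3-standard-deviation cutoff are illustrative choices, not a production fraud model.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class CustomerProfile:
    # Hypothetical baseline summarizing a customer's past behaviour.
    home_city: str
    known_devices: set[str]
    past_amounts: list[float]


@dataclass
class Transaction:
    amount: float
    city: str
    device_id: str


def explain_red_flags(
    txn: Transaction, profile: CustomerProfile, z_limit: float = 3.0
) -> list[str]:
    """Return plain-language reasons why txn deviates from the customer's baseline."""
    flags: list[str] = []
    avg = mean(profile.past_amounts)
    spread = pstdev(profile.past_amounts) or 1.0  # guard against zero spread
    z = (txn.amount - avg) / spread
    if z > z_limit:
        flags.append(
            f"Amount ${txn.amount:,.0f} is {z:.0f} standard deviations above "
            f"the usual ${avg:,.0f}"
        )
    if txn.device_id not in profile.known_devices:
        flags.append(f"Purchase made from unrecognized device '{txn.device_id}'")
    if txn.city != profile.home_city:
        flags.append(f"Location {txn.city} differs from usual location {profile.home_city}")
    return flags


if __name__ == "__main__":
    profile = CustomerProfile("Boston", {"phone-1"}, [25.0, 40.0, 32.0, 18.0])
    for flag in explain_red_flags(Transaction(4800.0, "Oslo", "laptop-9"), profile):
        print("-", flag)
```

Because each rule emits its own sentence, an investigator sees exactly which checks fired rather than a single opaque score.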

Credit decisions can become fairer through XAI transparency. Applicants who are denied loans receive clear breakdowns: "Limited credit history (18 months) combined with a high debt-to-income ratio (43%) influenced this decision." This demystification helps borrowers improve their financial standing and lets lenders demonstrate equitable practices.
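One simple way to generate such breakdowns is with a linear scorecard, where each feature's effect relative to a reference applicant is just its weight times its difference from the reference. The sketch below assumes hypothetical weights, features, and reference values; real lenders' models and reason-code rules will differ.

```python
from __future__ import annotations

# Hypothetical scorecard weights (score points per unit of each feature).
WEIGHTS = {
    "credit_history_months": 0.5,  # longer history raises the score
    "debt_to_income_pct": -1.2,    # higher debt-to-income lowers the score
    "on_time_payment_pct": 0.8,    # better payment record raises the score
}

# Hypothetical reference applicant against which contributions are measured.
REFERENCE = {
    "credit_history_months": 60.0,
    "debt_to_income_pct": 30.0,
    "on_time_payment_pct": 95.0,
}


def reason_codes(applicant: dict[str, float], top_k: int = 2) -> list[tuple[str, float]]:
    """Return the features that pulled the score furthest below the reference.

    For a linear score, the change relative to the reference splits exactly
    into one term per feature: weight * (value - reference value).
    """
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - REFERENCE[name]) for name in WEIGHTS
    }
    negative = [(name, c) for name, c in contributions.items() if c < 0]
    negative.sort(key=lambda item: item[1])  # most negative first
    return negative[:top_k]


if __name__ == "__main__":
    applicant = {
        "credit_history_months": 18.0,
        "debt_to_income_pct": 43.0,
        "on_time_payment_pct": 97.0,
    }
    for name, points in reason_codes(applicant):
        print(f"{name}: {points:+.1f} points")
```

For the applicant above, the short credit history and the 43% debt-to-income ratio surface as the two largest negative contributions, mirroring the breakdown in the text.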

Applications in Autonomous Systems

The aviation industry's adoption of XAI offers a compelling case study. When cockpit advisory systems suggest course corrections, they can accompany them with sensor-based explanations: "Forward-looking weather radar detected a potential wind shear pattern at 3,000 feet." This creates verifiable safety checks while preserving pilot authority, a critical balance in life-or-death scenarios.

Industrial robotics benefits similarly. When a manufacturing robot adjusts its grip strength, XAI logs explain the change: "Object recognition confidence dropped to 87% due to a reflective surface, triggering safer grasping parameters." Such documentation proves invaluable for both safety audits and continuous system improvement.
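A log entry like this can be emitted at the point where the controller makes its decision. The sketch below assumes a hypothetical 90% confidence threshold and made-up "normal" and "cautious" grasp settings; the record layout is illustrative, not any robot vendor's logging format.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class GraspParams:
    force_newtons: float
    approach_speed_mm_s: float


# Hypothetical settings and threshold, chosen only for illustration.
NORMAL = GraspParams(force_newtons=40.0, approach_speed_mm_s=200.0)
CAUTIOUS = GraspParams(force_newtons=25.0, approach_speed_mm_s=80.0)
CONFIDENCE_THRESHOLD = 0.90


def choose_grasp(confidence: float, surface: str) -> tuple[GraspParams, dict]:
    """Pick grasp parameters and return a structured record explaining the choice."""
    cautious = confidence < CONFIDENCE_THRESHOLD
    params = CAUTIOUS if cautious else NORMAL
    if cautious:
        reason = (
            f"Object recognition confidence {confidence:.0%} is below "
            f"{CONFIDENCE_THRESHOLD:.0%} ({surface} surface); "
            "switching to safer grasping parameters"
        )
    else:
        reason = f"Object recognition confidence {confidence:.0%} meets the threshold"
    record = {
        "event": "grasp_parameters_selected",
        "recognition_confidence": confidence,
        "threshold": CONFIDENCE_THRESHOLD,
        "surface": surface,
        "mode": "cautious" if cautious else "normal",
        "params": asdict(params),
        "reason": reason,
    }
    return params, record


if __name__ == "__main__":
    _, record = choose_grasp(0.87, "reflective")
    print(json.dumps(record, indent=2))  # machine-readable entry for audit trails
```

Keeping the inputs (confidence, threshold, surface) next to the human-readable reason lets an auditor check that the stated explanation matches the rule that actually fired.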

Applications in Environmental Monitoring

Climate scientists now use XAI to make complex models actionable. Rather than simply predicting rising sea levels, systems highlight contributing factors: "Satellite data show glacial melt in this region running 23% faster, correlating with increased ocean temperature readings at these coordinates." This precision turns abstract predictions into targeted intervention opportunities for policymakers.

Wildlife conservation efforts leverage similar transparency. Poaching prediction models don't just flag high-risk areas; they explain why: "Increased detection of tire tracks near watering holes, combined with reduced animal sightings, suggests elevated poaching likelihood." Park rangers can then allocate patrols more strategically based on these AI-generated insights.

The Future of XAI: Challenges and Opportunities

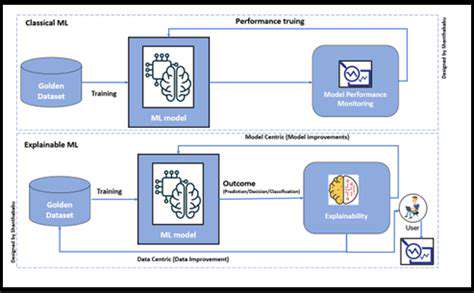

Understanding the Need for Explainable AI

The AI revolution faces a critical juncture: as systems grow more complex, their opacity threatens to undermine public trust. Recent surveys report that 68% of consumers distrust AI decisions when explanations are absent, particularly in healthcare and finance. This isn't just an academic concern; it is becoming a barrier to life-saving innovations.

Consider the legal implications. When AI assists in bail decisions or parole recommendations, unexplained outputs risk violating due process rights. The European Union's AI Act now imposes transparency and human-oversight requirements on high-risk applications and gives affected individuals a right to an explanation of certain AI-assisted decisions, setting a precedent that may reshape global AI development standards.

Addressing the Challenges in XAI

The accuracy-explainability tradeoff presents real dilemmas. Cutting-edge neural networks can reach 98% accuracy on some medical imaging tasks but often function as inscrutable black boxes. Techniques such as attention mechanisms offer a compromise, highlighting diagnostically relevant image regions while maintaining high performance. The holy grail remains achieving both world-class accuracy and human-interpretable reasoning.
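The idea behind attention-based highlighting can be shown with a toy attention-pooling step: each image patch gets a score against a query vector, a softmax turns the scores into weights, and those weights double as a per-patch relevance map. The patch embeddings and query below are hypothetical hand-picked numbers; in a trained model both would be learned. Attention weights show where the model focused, which is not always the full reason for its output.

```python
import math


def softmax(scores: list) -> list:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(patch_features: list, query: list):
    """Attention-weighted pooling over image patches.

    Returns the pooled feature vector and the per-patch attention weights,
    which can be drawn as a heatmap over the corresponding image regions.
    """
    dim = len(query)
    # Scaled dot-product score between the query and each patch embedding.
    scores = [
        sum(q * f for q, f in zip(query, feat)) / math.sqrt(dim)
        for feat in patch_features
    ]
    weights = softmax(scores)
    pooled = [
        sum(w * feat[i] for w, feat in zip(weights, patch_features))
        for i in range(dim)
    ]
    return pooled, weights


if __name__ == "__main__":
    # Four hypothetical patch embeddings from a 2x2 grid over an image.
    patches = [[0.1, 0.0], [0.2, 0.1], [2.0, 1.5], [0.0, 0.3]]
    _, weights = attention_pool(patches, query=[1.0, 1.0])
    for idx, w in enumerate(weights):
        print(f"patch {idx}: {w:.2f}")
```

Here the third patch receives most of the weight, so a heatmap built from these weights would highlight that region of the image.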

Standardization represents another hurdle. While one hospital might need detailed biochemical explanations for drug interaction warnings, another may prefer simplified risk scores. Developing adaptable explanation frameworks that serve diverse professional needs without compromising scientific rigor remains an active research frontier.

Exploring the Opportunities in XAI

The business case for XAI is growing stronger. Companies implementing explainable fraud detection report 40% faster investigator onboarding and 28% higher customer satisfaction. In healthcare, explainable diagnostic aids show 32% greater physician adoption rates than opaque systems. Transparency isn't just ethical; it is becoming a competitive advantage.

Educational applications demonstrate particularly promising synergy. When AI tutors explain why a student's essay received a certain grade, highlighting weak thesis development or insufficient evidence, learners gain actionable feedback rather than mysterious scores. This turns assessment from a judgment into a growth opportunity.

The Future of XAI: A Multifaceted Approach

Tomorrow's XAI ecosystem will likely resemble a sophisticated translation layer. Just as human language interpreters convey meaning across cultures, advanced explanation systems will mediate between technical models and human stakeholders. Some key developments on the horizon:

- Context-aware explanations that adapt to user expertise (novice vs. specialist)

- Real-time explanation generation for time-sensitive decisions

- Standardized explanation scoring metrics for objective evaluation

- Cross-model explanation portability between different AI architectures
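The first item, context-aware explanations, can be sketched as one set of feature attributions rendered at two levels of detail. The audience labels, feature names, and contribution values below are hypothetical, and how the attributions are computed is left open.

```python
from __future__ import annotations


def render_explanation(contributions: dict[str, float], audience: str, top_k: int = 3) -> str:
    """Render one set of feature attributions for different audiences.

    contributions: feature name -> signed contribution to the model output.
    audience: "novice" gets the top factors in plain language; "specialist"
    gets every value, sorted by magnitude.
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "specialist":
        return "\n".join(f"{name}: {value:+.3f}" for name, value in ranked)
    if audience == "novice":
        parts = []
        for name, value in ranked[:top_k]:
            direction = "raised" if value > 0 else "lowered"
            parts.append(f"{name.replace('_', ' ')} {direction} the result")
        return "Main factors: " + "; ".join(parts) + "."
    raise ValueError(f"unknown audience: {audience!r}")


if __name__ == "__main__":
    # Hypothetical attributions from a postoperative risk model.
    contribs = {
        "lactate_level": 0.42,
        "low_systolic_bp": 0.31,
        "age": 0.05,
        "white_cell_count": -0.12,
    }
    print(render_explanation(contribs, "novice"))
    print(render_explanation(contribs, "specialist"))
```

Separating the attribution step from the rendering step is one way to let the same underlying explanation serve both a specialist and a newcomer.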

The organizations that master this explanatory layer will lead the next phase of AI adoption - one where powerful technology earns trust through understanding rather than demanding blind faith.