The Importance of Explainable AI (XAI)

Understanding Explainable AI

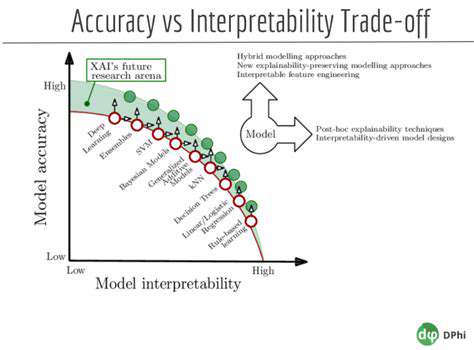

The field of Explainable AI (XAI) has gained significant traction as organizations seek to demystify the decision-making processes of artificial intelligence systems. Unlike traditional black-box models, XAI systems aim to provide clear, human-understandable explanations for AI-driven conclusions. This transparency becomes particularly vital when AI is deployed in sensitive areas like medical diagnosis or financial risk assessment, where accountability matters.

When users can trace how an AI model reaches its conclusions, they're better equipped to verify accuracy and spot potential flaws in the reasoning. This visibility transforms AI from an oracle into a collaborative tool that teams can refine and improve over time.

Transparency in AI Systems

True AI transparency requires more than showing inputs and outputs; it demands a clear view of the reasoning chain. Modern XAI techniques visualize decision pathways, highlight influential data points, and sometimes generate plain-language explanations. These features don't just satisfy curiosity; they lay the foundation for regulatory compliance and ethical deployment.
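One widely used way to highlight influential inputs is permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. The sketch below is a minimal, standard-library illustration of that idea, not a production implementation. The toy model, the feature meanings, and the data are all hypothetical.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows the model classifies correctly."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)

def permutation_importance(model, rows, labels, n_features, n_repeats=20, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            # Shuffle only column j, leaving every other feature untouched.
            column = [row[j] for row in rows]
            rng.shuffle(column)
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical toy model: approves when feature 0 ("income") exceeds 50
# and ignores feature 1 entirely.
def toy_model(row):
    return 1 if row[0] > 50 else 0

rows = [[30, 1], [80, 0], [45, 1], [90, 1], [20, 0], [70, 0], [55, 1], [40, 0]]
labels = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
print(importances)
```

Because the toy model never reads feature 1, shuffling it cannot change any prediction and its importance is zero, while feature 0 shows a positive drop. Real models call for held-out data and more repeats.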

Benefits of Explainable AI

XAI offers several operational advantages. The ability to audit AI decision trails helps teams uncover hidden biases that might otherwise skew results, particularly when working with imperfect training data. This becomes crucial when fairness considerations affect people's lives, such as in hiring algorithms or loan approvals.
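A bias audit of this kind often starts with something very simple: comparing how often each group receives a favorable decision. The following sketch assumes a hypothetical audit log of `(group, approved)` pairs and computes per-group selection rates plus their ratio. The group labels and data are invented for illustration.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Share of positive decisions per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += 1 if approved else 0
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity).

    Assumes at least one group has a non-zero selection rate.
    """
    return min(rates.values()) / max(rates.values())

# Hypothetical audit log: (group label, loan approved?)
audit_log = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(audit_log)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
```

A low ratio does not prove discrimination on its own. It is a signal that the decision trail for the disadvantaged group deserves a closer look.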

Additionally, explainable systems enable continuous monitoring, allowing engineers to identify performance drift or emerging issues before they create major problems. The result? More resilient AI deployments that maintain accuracy over time.
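One way such monitoring can be implemented is to compare the distribution of a feature or model score at training time with what the live system sees. The sketch below uses the Population Stability Index (PSI), a common drift statistic. The bin edges and score samples are hypothetical, and acceptable PSI levels depend on the application.

```python
import math

def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples over shared bins.

    `edges` are ascending cut points; values below the first edge fall in
    bin 0, values at or above the last edge fall in the final bin.
    """
    def bin_shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(1 for e in edges if v >= e)
            counts[idx] += 1
        n = len(values)
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / n, eps) for c in counts]

    e = bin_shares(expected)
    a = bin_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical model scores at training time and in production.
training_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live_scores = [0.6, 0.7, 0.8, 0.9, 0.7, 0.8, 0.9, 0.95]
edges = [0.25, 0.5, 0.75]

no_drift = psi(training_scores, training_scores, edges)
drift = psi(training_scores, live_scores, edges)
print(no_drift, drift)
```

Identical distributions give a PSI of zero. The shifted live sample produces a clearly positive value that a monitoring job could alert on.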

Applications of Explainable AI

XAI's real-world impact spans numerous sectors. Medical teams use interpretable diagnostic tools to validate AI suggestions against clinical knowledge. Banks deploy transparent fraud detection systems that compliance officers can thoroughly vet. The technology's adaptability means nearly any industry can benefit, from manufacturing quality control to personalized education platforms that adapt to individual learning styles.

Ethical Considerations in XAI

As XAI matures, developers face important design choices about explanation depth and presentation. Effective explanations must balance technical accuracy with audience comprehension: a medical AI might show different details to researchers than to clinicians. There's also the challenge of ensuring that explanations themselves don't mislead through oversimplification.

The Path Forward for Explainable AI

Current research pushes XAI capabilities in exciting directions. New visualization techniques transform complex model behaviors into intuitive dashboards, while advances in natural language generation allow AI to describe its reasoning in context-appropriate ways. These innovations will likely accelerate as regulatory frameworks increasingly mandate AI transparency across sectors.

Real-World Implementations of Interpretable AI

Healthcare Transformation

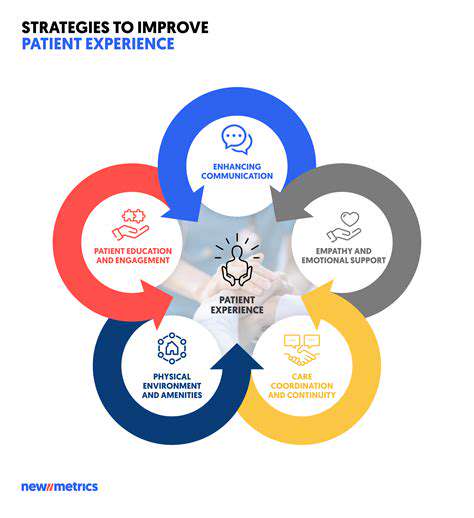

Interpretable models are changing healthcare by turning diagnostic AI into a collaborative tool rather than a mysterious oracle. When a predictive model flags a patient's high diabetes risk, clinicians can see which factors, from lab results to lifestyle indicators, contributed most to the assessment. This shared understanding improves treatment planning and patient education.
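For a linear model such as logistic regression, "which factors contributed most" has a direct reading: each feature's coefficient times its value is that feature's contribution to the log-odds. The sketch below illustrates this with made-up coefficients and a made-up patient. The feature names and weights are hypothetical, assume standardized inputs, and are not clinically validated.

```python
import math

# Hypothetical coefficients for standardized inputs; not clinically validated.
WEIGHTS = {"hba1c": 1.2, "bmi": 0.6, "age": 0.3, "weekly_exercise": -0.5}
INTERCEPT = -1.0

def explain_risk(patient):
    """Return predicted risk and each feature's contribution to the log-odds,
    ranked by absolute size."""
    contributions = {name: WEIGHTS[name] * patient[name] for name in WEIGHTS}
    log_odds = INTERCEPT + sum(contributions.values())
    risk = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return risk, ranked

# Hypothetical patient, expressed in standard deviations from the mean.
patient = {"hba1c": 2.0, "bmi": 1.0, "age": 0.5, "weekly_exercise": -1.0}

risk, ranked = explain_risk(patient)
for name, contribution in ranked:
    print(f"{name:16s} {contribution:+.2f}")
print(f"predicted risk: {risk:.2f}")
```

For this patient the elevated `hba1c` value dominates the assessment. A clinician could check that ranking against their own reading of the chart.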

Transparent models also help identify when certain demographics might be underrepresented in training data, prompting teams to address gaps before real-world deployment. The result is healthcare AI that is more likely to work reliably across diverse populations.

Financial Sector Applications

Banks now demand interpretability in credit scoring and fraud detection systems. Loan officers need to explain decisions to applicants, while regulators require proof that algorithms don't discriminate. Modern XAI solutions generate compliant documentation automatically, detailing exactly which financial behaviors triggered alerts or approvals.

This transparency also helps financial institutions refine their models. When a fraud detection system flags too many false positives, analysts can drill into the decision patterns and adjust feature weights or alert thresholds accordingly.
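Drilling into false positives can be as simple as tallying which explanation "reason codes" appear most often on alerts that turned out to be legitimate. The sketch below assumes each alert record carries a list of reasons and a confirmed outcome. The field names and reason codes are hypothetical.

```python
from collections import Counter

def false_positive_reasons(alerts):
    """Count reason codes across alerts later confirmed as legitimate,
    most frequent first."""
    counts = Counter()
    for alert in alerts:
        if not alert["confirmed_fraud"]:
            counts.update(alert["reasons"])
    return counts.most_common()

# Hypothetical alert history with the explanation attached to each alert.
alerts = [
    {"reasons": ["foreign_merchant", "large_amount"], "confirmed_fraud": False},
    {"reasons": ["foreign_merchant"], "confirmed_fraud": False},
    {"reasons": ["foreign_merchant", "new_device"], "confirmed_fraud": False},
    {"reasons": ["new_device", "large_amount"], "confirmed_fraud": False},
    {"reasons": ["large_amount", "rapid_transactions"], "confirmed_fraud": True},
]

top_reasons = false_positive_reasons(alerts)
print(top_reasons)
```

Here `foreign_merchant` drives most of the false alarms, which suggests that signal is a candidate for a lower weight or a stricter threshold.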

Customer Experience Optimization

Marketing teams leverage interpretable churn prediction models to develop targeted retention strategies. Rather than guessing why customers leave, they can see which service aspects or pricing factors most influence attrition risk. Some companies even build these insights directly into their CRM systems, triggering personalized interventions when risk factors appear.

Environmental Protection

Climate scientists use interpretable models to prioritize conservation efforts. When a system predicts ecological threats, researchers can identify whether industrial activity, temperature changes, or other factors drive the forecast. This precision helps allocate limited resources where they'll make the most difference.

Similar approaches support natural disaster forecasting. Understanding which early warning signs matter most allows communities to prepare more effectively for events like wildfires or floods.

Language Technology Breakthroughs

Modern sentiment analysis tools don't just output scores; they highlight the specific phrases that swayed their judgment. This helps brands understand customer feedback at scale and makes misreadings of sarcasm or cultural context easier to spot. The same technology powers more transparent content moderation systems that can justify their decisions to affected users.
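The simplest form of phrase highlighting comes from a lexicon-based scorer, where every matched word carries a known weight. The sketch below is a deliberately small illustration. The lexicon and its weights are hypothetical, and the tokenizer is a plain whitespace split that ignores punctuation, negation, and sarcasm.

```python
# Hypothetical sentiment lexicon: word -> weight (positive is favorable).
LEXICON = {"great": 2.0, "love": 1.5, "slow": -1.0, "broken": -2.0, "refund": -0.5}

def explain_sentiment(text):
    """Return the overall score and the words that contributed to it."""
    tokens = text.lower().split()
    contributions = [(tok, LEXICON[tok]) for tok in tokens if tok in LEXICON]
    score = sum(weight for _, weight in contributions)
    return score, contributions

score, highlights = explain_sentiment(
    "Love the design but the app is slow and checkout is broken"
)
print(score, highlights)
```

Because the highlighted words are shown alongside the score, a reviewer can see when the verdict rests on a word used ironically or out of context. Learned models need attribution methods to produce comparable highlights.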

Social Policy Innovation

Urban planners and policymakers employ interpretable models to allocate public resources effectively. When predictive policing systems identify crime hotspots, officials can examine whether socioeconomic factors, urban design, or other variables contribute most to the prediction. This informs more holistic solutions than simple enforcement increases.