Algorithmic Transparency and Explainability

Demystifying Algorithmic Decisions

True transparency in educational AI systems requires more than superficial explanations of inputs and outputs. Educators need meaningful insight into the reasoning processes behind automated recommendations to assess their validity and potential biases. This level of understanding enables teachers to identify and address systemic issues that might otherwise go unnoticed.

Opaque systems undermine trust among educators and students alike. The problem is most serious when algorithms influence critical educational decisions that shape students' academic trajectories.

The Imperative of Explainable AI

Explainability goes beyond basic transparency by exposing the rationale behind individual algorithmic outputs. In educational contexts, this means helping teachers understand why a system recommends a specific intervention for a particular student. Such insights allow for more nuanced instructional adjustments that address individual learning needs.
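One way to picture an explanation of this kind is a per-feature breakdown of a score. The sketch below assumes a simple linear scoring model. The feature names, weights, and the `explain_score` helper are hypothetical and chosen only for illustration. Real educational systems are often more complex and may need dedicated explanation techniques.

```python
def explain_score(weights, features, baseline=0.0):
    """Split a linear score into per-feature contributions.

    Returns the total score and the contributions sorted by absolute size,
    so a teacher can see which inputs drove the recommendation most.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = baseline + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical weights and student record, for illustration only.
weights = {"missed_assignments": 0.5, "quiz_average": -0.25, "absences": 0.5}
student = {"missed_assignments": 4, "quiz_average": 6.0, "absences": 1}

score, ranked = explain_score(weights, student)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
print(f"intervention score: {score:.2f}")
```

A breakdown like this lets a teacher check whether the dominant factor matches what they observe in the classroom before acting on the recommendation.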

Identifying and Correcting Systemic Biases

Algorithmic systems in education can inadvertently replicate societal inequities if not carefully designed. Historical data reflecting gender or racial disparities might lead to biased resource recommendations or learning path suggestions. Proactive measures to audit training data and correct identified biases must become standard practice.

The integrity of educational AI depends fundamentally on the quality and representativeness of its training data. Regular evaluations can help identify and mitigate patterns that might disadvantage specific student populations.
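A regular evaluation of this kind could start with a comparison of how often each student group receives a given recommendation. The following is a minimal sketch, assuming an audit log of (group, recommended) pairs. The group labels, the sample data, and the 0.8 review threshold are illustrative assumptions, not values from any particular system or policy.

```python
from collections import defaultdict


def recommendation_rates(records):
    """Return the share of students in each group who received the recommendation."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was recommended, so no group is favored
    return min(rates.values()) / highest


# Hypothetical audit log: (group label, whether the system recommended enrichment).
records = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = recommendation_rates(records)
ratio = disparity_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cutoff for illustration; set by local policy
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove the system is biased, since groups may differ for legitimate reasons, but it signals where a closer human review of the training data and recommendations is warranted.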

Educators as Critical Evaluators

Teachers occupy a vital position in ensuring ethical AI implementation. By critically examining algorithmic outputs and discussing their implications with students, educators can foster important conversations about technology's role in society. This includes analyzing the limitations of automated systems and reaffirming the value of human judgment in learning environments.

Professional development programs should equip educators with the analytical tools needed to navigate increasingly algorithm-driven educational landscapes.

Balancing Innovation and Ethics

The educational applications of AI raise significant questions about student privacy and data protection. Clear policies must govern how sensitive information is collected, stored, and used. Equally important are mechanisms for holding system developers accountable for algorithmic decisions that produce harmful outcomes.

Ongoing evaluation processes should assess whether these technologies inadvertently reinforce existing social inequalities, with adjustments made to ensure equitable access to educational opportunities.

Mitigating Bias in Evaluation and Assessment

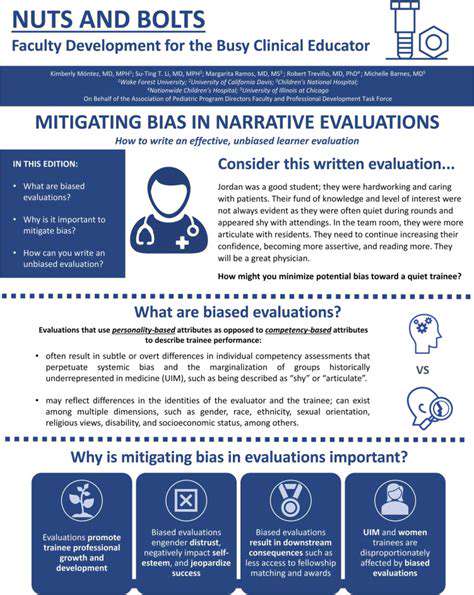

Recognizing Evaluation Biases

Evaluation processes inherently carry the potential for bias, whether from conscious prejudices or unconscious assumptions. The first step toward fair assessment involves acknowledging these potential distortions. This requires examining evaluator backgrounds, assessment criteria, and contextual factors that might influence judgments.

Unconscious biases pose particular challenges because they operate below our awareness. Common examples include affinity bias (favoring people with similar backgrounds) and confirmation bias (selectively noticing information that confirms preexisting beliefs). Combating these tendencies requires deliberate effort and structured self-reflection.

Creating Objective Assessment Frameworks

Well-defined, measurable criteria form the foundation of unbiased evaluation. Vague standards leave too much room for subjective interpretation, while specific benchmarks promote consistency. Developing these criteria should involve diverse stakeholders to ensure comprehensive coverage of relevant competencies.

Assessment rubrics should focus on observable behaviors and demonstrable skills rather than personality traits or cultural preferences. Regular reviews help identify and eliminate potential bias in evaluation instruments.

Implementing Bias-Reduction Strategies

Comprehensive training programs can help evaluators recognize and counteract their unconscious biases. These programs should provide practical techniques for maintaining objectivity and considering diverse perspectives. Including evaluators from varied backgrounds further enhances assessment fairness through multiple viewpoints.

Standardized rating scales and multiple evaluators help minimize individual subjectivity. These approaches should be refined continuously as best practices in equitable assessment evolve.
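As a rough illustration of combining multiple evaluators, the sketch below averages scores given on a shared rating scale and flags submissions where evaluators disagree widely. The `combine_ratings` helper, the submission names, and the one-point tolerance are hypothetical choices for this example.

```python
from statistics import mean


def combine_ratings(ratings_by_submission, max_spread=1):
    """Average each submission's scores and flag wide disagreement between raters."""
    summary = {}
    for submission, scores in ratings_by_submission.items():
        spread = max(scores) - min(scores)
        summary[submission] = {
            "mean": mean(scores),
            "spread": spread,
            # A large gap between raters suggests the rubric was read differently.
            "needs_second_look": spread > max_spread,
        }
    return summary


# Hypothetical scores from three evaluators on a shared 1-to-4 scale.
ratings = {
    "essay_01": [3, 3, 4],
    "essay_02": [1, 4, 2],
}

summary = combine_ratings(ratings)
for submission, result in summary.items():
    print(submission, result)
```

Flagged submissions can be routed to a discussion between evaluators rather than resolved by the average alone, which keeps the final judgment with people.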

Promoting Transparency in Evaluation

Clear communication about assessment processes builds trust among participants. Detailing evaluation criteria, procedures, and appeal mechanisms demonstrates commitment to fairness. Constructive feedback should highlight specific areas for improvement rather than subjective impressions.

Establishing formal channels for addressing evaluation concerns allows for continuous process improvement. This feedback loop helps maintain assessment integrity over time.

The Role of Human Oversight and Ethical Frameworks

Navigating AI Ethics in Education

AI's educational applications present both opportunities and ethical dilemmas. While these technologies can personalize learning and streamline administration, they require robust oversight to prevent unintended harm. Careful examination of training data and potential impacts is essential before deploying AI systems in classrooms.

Comprehensive ethical guidelines should address data privacy, decision transparency, and clear accountability. Students' personal information deserves stringent protections, and all stakeholders should understand how automated decisions are made. Those responsible for AI systems must be answerable for their outcomes.

Maintaining Human Judgment in Digital Education

Educators must retain the ability to critically assess AI-generated recommendations rather than accepting them at face value. Professional development programs should help teachers interpret algorithmic outputs and recognize their limitations. This human oversight ensures technology enhances rather than replaces the educator's professional judgment.

AI should function as a supportive tool, not a replacement for human interaction in education. The invaluable elements of empathy and personalized attention require human educators, with technology serving to augment rather than supplant these qualities.

Involving diverse community members in developing AI guidelines helps ensure these systems reflect collective values. This collaborative approach promotes responsible implementation that serves all students equitably.