Defining Facial Recognition Bias

Facial recognition technology is often perceived as neutral, yet it can carry deeply ingrained biases. These biases emerge largely from the data used to train the systems, which frequently overrepresents some population segments. The result is a mechanism prone to errors and unjust outcomes that disproportionately affect marginalized communities. Understanding this systemic shortcoming is vital for ethical AI development.

This isn't merely about occasional mistakes—it's a structural issue reinforcing societal inequities. Manifestations range from misidentification to skewed detection rates, undermining both the fairness and dependability of this influential technology.

Data Sets and Their Impact

Training facial recognition models requires massive image collections. When these datasets lack diversity or fail to mirror real-world demographics, the algorithms inherit and amplify existing prejudices. This becomes especially problematic when certain ethnicities, genders, or age groups are underrepresented, creating systems with limited applicability.

Consider a dataset dominated by lighter-skinned individuals: the resulting algorithm will likely falter when processing darker complexions. Such deficiencies underscore the urgent need for comprehensive, representative training data to develop equitable facial recognition tools.
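As a rough illustration of the point above, the sketch below compares each group's share of a dataset with a reference share. The function name `representation_gaps`, the group labels, and the 50/50 reference shares are all hypothetical and chosen only for the example; real audits would need carefully sourced demographic annotations.

```python
from collections import Counter

def representation_gaps(labels, reference_shares):
    """Return (dataset share - reference share) for each reference group.

    A negative value means the group is underrepresented relative to
    the reference population.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        group: counts.get(group, 0) / total - ref_share
        for group, ref_share in reference_shares.items()
    }

# Hypothetical annotations and reference shares, for illustration only.
labels = ["lighter"] * 80 + ["darker"] * 20
reference = {"lighter": 0.5, "darker": 0.5}
gaps = representation_gaps(labels, reference)

for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A large negative gap for a group is a signal to collect more data for that group before training, not a guarantee that the resulting model will be biased.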

Algorithmic Design and Bias

Bias can also surface in how an algorithm weighs facial characteristics. Features such as skin tone or facial structure may receive disproportionate emphasis during analysis, producing distorted outputs. Typically this results not from deliberate design choices but from imbalanced training data shaping what the model learns.
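One common way to offset imbalanced training data is to weight each sample inversely to the frequency of its group, so that every group contributes equally to the training loss. The sketch below is a minimal version of that idea. The function name `inverse_frequency_weights` and the group labels are hypothetical, and whether reweighting is sufficient depends on the model and the data.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Assign each sample a weight of n / (k * count_of_its_group).

    n is the number of samples and k the number of distinct groups, so
    the weights sum to n and every group carries the same total weight.
    """
    counts = Counter(groups)
    n = len(groups)
    k = len(counts)
    return [n / (k * counts[g]) for g in groups]

# Hypothetical group labels: three samples from group "a", one from "b".
weights = inverse_frequency_weights(["a", "a", "a", "b"])
print(weights)
```

These weights would typically be passed to a training routine that accepts per-sample weights; that step is omitted here because it depends on the framework in use.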

The Role of Developers in Mitigation

Tech professionals bear significant responsibility in combating facial recognition bias. They must rigorously test for potential prejudices and implement corrective measures. Key strategies include:

- Curating diverse, representative datasets

- Applying techniques to counterbalance existing disparities

- Conducting ongoing performance evaluations across demographic groups
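The evaluation step in the list above can be sketched as follows for a face verification system, where each trial compares two images and predicts whether they show the same person. The record layout, the function name `error_rates_by_group`, and the sample data are assumptions made for illustration.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Compute false match and false non-match rates per group.

    Each record is (group, same_person, predicted_match).
    A rate is None when the group has no trials of the relevant kind.
    """
    tallies = defaultdict(
        lambda: {"fm": 0, "impostor": 0, "fnm": 0, "genuine": 0}
    )
    for group, same_person, predicted_match in records:
        t = tallies[group]
        if same_person:
            t["genuine"] += 1
            if not predicted_match:
                t["fnm"] += 1  # genuine pair wrongly rejected
        else:
            t["impostor"] += 1
            if predicted_match:
                t["fm"] += 1  # impostor pair wrongly accepted
    return {
        group: {
            "false_match_rate": t["fm"] / t["impostor"] if t["impostor"] else None,
            "false_non_match_rate": t["fnm"] / t["genuine"] if t["genuine"] else None,
        }
        for group, t in tallies.items()
    }

# Hypothetical trial results, for illustration only.
records = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", False, False), ("group_a", False, False),
    ("group_b", True, False), ("group_b", True, True),
    ("group_b", False, True), ("group_b", False, False),
]
rates = error_rates_by_group(records)
for group, r in rates.items():
    print(group, r)
```

Reporting both rates per group matters because a system can look accurate overall while one group absorbs most of the errors.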

Ethical Implications and Societal Impact

The consequences of biased facial recognition extend far beyond technical limitations. Faulty identifications can lead to wrongful accusations, discriminatory practices in employment and services, and an erosion of public trust in technology. Such outcomes carry severe repercussions for individuals and society at large.

Addressing Bias Through Responsible AI Practices

Combating facial recognition bias demands a multidimensional strategy. Beyond technical fixes, it requires:

- Ethical guidelines for development

- Collaboration between technologists, ethicists, and policymakers

- Robust governance frameworks ensuring accountability

Future Directions for Research and Development

Continued innovation is essential to resolve facial recognition biases. Priorities include:

- Developing novel algorithm training methodologies

- Creating effective bias detection and correction tools

- Enhancing transparency throughout system design and operation

The ultimate objective? Creating facial recognition that's not just accurate, but genuinely equitable for all users.

Autonomous Weapons Systems: The Question of Accountability

Defining Autonomous Weapons Systems

Autonomous weapons systems (AWS) are military technologies capable of independently selecting and engaging targets. By leveraging AI and machine learning, these systems process enormous volumes of data to identify and neutralize threats. Their potential for independent operation raises critical ethical debates about accountability and unintended consequences.

Understanding AWS varieties—from basic target recognition to advanced decision-making platforms—is essential for proper ethical assessment. This includes examining each system's autonomy level and how it influences operational decisions.

The Ethical Dilemma of Lethal Autonomous Weapons

The most pressing ethical concern is the application of lethal force without human oversight. This raises profound accountability questions, particularly regarding civilian casualties. When systems err or cause harm, who bears responsibility? The absence of human judgment in critical decisions presents a formidable ethical challenge.

Accountability and International Law

Current international humanitarian law was designed for human combatants and does not clearly address AWS scenarios. Legal frameworks may require substantial revision to address:

- State and manufacturer liabilities

- Operator responsibilities

- Situations with minimal human oversight

The Risk of Unintended Consequences

Complex AWS algorithms carry inherent risks:

- Potential for catastrophic errors

- Unintended civilian targeting

- Creation of new battlefield vulnerabilities

Proactive measures are essential to mitigate these security threats.

The Impact on Human Control and Decision-Making

Growing reliance on AWS risks diminishing human authority in military operations. Concerns include the dehumanization of warfare and the loss of critical judgment in life-or-death situations. Maintaining meaningful human control remains an ethical imperative.

Transparency and Explainability in AI Systems

Opaque AI algorithms in AWS hinder:

- Bias assessment

- Error detection

- Accountability determination

Developing transparent, explainable systems is crucial for establishing trust.

Public Engagement and Ethical Frameworks

Responsible AWS development requires:

- Multidisciplinary stakeholder input

- Open public discourse

- Comprehensive ethical guidelines emphasizing accountability, proportionality, and human oversight

Algorithmic Decisions in Hiring and Lending: The Impact on Opportunity

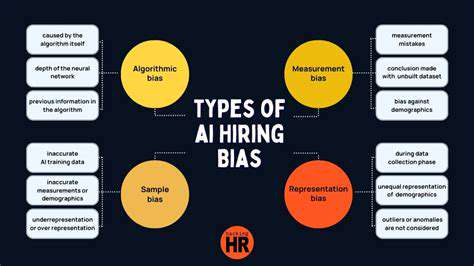

Algorithmic Bias and Fairness

Automated hiring platforms promise efficiency but risk perpetuating societal prejudices if improperly designed. These biases frequently disadvantage specific demographic groups through:

- Discriminatory candidate scoring

- Unfair screening outcomes

Historical hiring data often contains embedded biases that algorithms may unintentionally amplify. Addressing these issues is critical for equitable employment practices.
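One simple way to check screening outcomes for the kind of disparity described above is to compare selection rates across groups. The sketch below divides each group's selection rate by the highest group's rate. A ratio below 0.8 is a commonly cited warning threshold (the "four-fifths rule" from US employment guidance), though it is a heuristic rather than a legal determination. The function names, group labels, and counts are hypothetical.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """Return the fraction of applicants selected in each group.

    outcomes is an iterable of (group, was_selected) pairs.
    """
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in outcomes:
        total[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / total[group] for group in total}

def impact_ratios(rates):
    """Divide each group's selection rate by the highest selection rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selections")
    return {group: rate / best for group, rate in rates.items()}

# Hypothetical screening results: 5 of 10 selected vs. 3 of 10 selected.
outcomes = (
    [("group_x", True)] * 5 + [("group_x", False)] * 5
    + [("group_y", True)] * 3 + [("group_y", False)] * 7
)
ratios = impact_ratios(selection_rates(outcomes))
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

A flagged group indicates that the screening step deserves closer review; it does not by itself establish the cause of the gap.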

Data Collection and Representation

Training data quality directly impacts algorithmic fairness. Limited datasets that focus on particular educational backgrounds, professional experiences, or geographic regions may overlook qualified candidates from diverse circumstances. Comprehensive data collection across multiple dimensions helps mitigate these limitations.

Transparency and Explainability

Understanding hiring algorithm decision-making is fundamental for:

- Building organizational trust

- Ensuring accountability

Explainable AI (XAI) techniques illuminate the decision pathway, allowing identification and correction of potential biases.
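For the simplest case, a linear scoring model, the decision pathway can be made visible by listing how much each feature adds to the final score. The sketch below assumes such a linear model. The feature names, weights, and the function `feature_contributions` are hypothetical, and more complex models would need dedicated attribution methods instead.

```python
def feature_contributions(weights, features, bias=0.0):
    """Break a linear score into per-feature contributions.

    The score is bias + sum(weight * value), so each product shows how
    much that feature moved the score.
    """
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    return score, contributions

# Hypothetical weights and candidate features, for illustration only.
weights = {"years_experience": 0.5, "skills_match": 2.0}
candidate = {"years_experience": 4, "skills_match": 0.75}
score, contributions = feature_contributions(weights, candidate)

# List features from most to least influential.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
print(f"score: {score:.2f}")
```

A breakdown like this lets a reviewer spot a feature that acts as a proxy for a protected attribute, which is harder to see from the final score alone.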

Impact on Candidate Experience

Poorly implemented hiring algorithms create negative applicant experiences through:

- Excessive automated screenings

- Lack of human interaction

- Insufficient feedback

Optimizing candidate journeys strengthens employer brands and attracts top talent.

Ethical Considerations in Algorithmic Hiring

Algorithmic recruitment raises multiple ethical concerns requiring:

- Continuous bias monitoring

- Regular system audits

- Clear usage guidelines

Transparency and accountability form the foundation for ethical automated hiring practices.

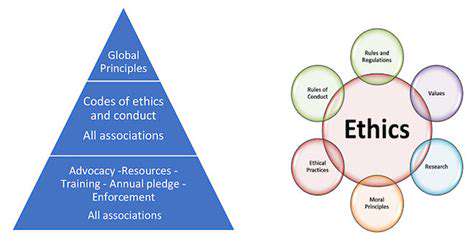

The Role of Ethical Frameworks and Regulations

Ethical Considerations in AI Development

AI's expanding role across industries makes ethical development crucial. As systems grow more autonomous, addressing potential harms becomes increasingly urgent. Key priorities include:

- Identifying and mitigating biases

- Ensuring decision-making transparency

- Preventing the reinforcement of inequality

Bias and Fairness in AI Algorithms

Algorithmic bias remains a significant challenge. Effective solutions involve:

- Comprehensive data vetting

- Bias-aware algorithm design

- Diverse stakeholder input during development

Transparency and Explainability in AI

The black box problem in AI necessitates:

- Clear decision pathway documentation

- Explainable AI implementation

- Regular third-party audits

Transparent systems foster trust and enable proper oversight.

Accountability and Responsibility in AI Systems

Determining liability for AI errors requires:

- Clear role definitions

- Continuous performance monitoring

- Robust recourse mechanisms

Privacy Concerns and Data Security

AI's data requirements mandate:

- Strong encryption protocols

- Strict access controls

- Comprehensive privacy policies

The Impact on Human Work and Society

Addressing AI's workforce implications involves:

- Large-scale retraining initiatives

- Creating new employment opportunities

- Inclusive public dialogue about technology's societal role