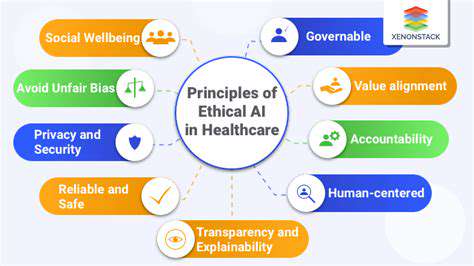

Key Principles of Ethical AI Governance

Fairness and Non-Discrimination

Creating AI systems that uphold ethical standards requires deliberate efforts to prevent the reinforcement of societal biases. Engineers and developers must scrutinize potential disparities in outcomes linked to race, gender, socioeconomic status, or other protected attributes. Proactive measures like data preprocessing, algorithmic adjustments, and continuous oversight are indispensable for minimizing bias. Neglecting these steps risks producing discriminatory results, which can deepen existing inequalities and erode public confidence.

Additionally, achieving fairness hinges on a thorough analysis of training data. When datasets mirror real-world prejudices, the resulting AI models inherit these flaws, perpetuating damaging stereotypes. Detecting and correcting biased data is therefore a foundational step in developing equitable AI solutions.
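A first-pass audit of training data can start by counting how each group is represented and how often each group carries the positive label. The sketch below assumes records are dictionaries with a group attribute and a binary label. The field names `group` and `label`, the helper names, and the toy data are all hypothetical. The gap it reports is the difference between the highest and lowest positive-label rates, a demographic-parity-style measure applied to labels rather than model predictions. It is only one of several possible fairness measures.

```python
from collections import defaultdict


def audit_groups(records, group_key="group", label_key="label"):
    """Return each group's share of the dataset and its positive-label rate."""
    counts = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        counts[group] += 1
        positives[group] += 1 if row[label_key] else 0
    total = sum(counts.values())
    return {
        group: {
            "share": counts[group] / total,
            "positive_rate": positives[group] / counts[group],
        }
        for group in counts
    }


def positive_rate_gap(report):
    """Largest difference in positive-label rate between any two groups."""
    rates = [stats["positive_rate"] for stats in report.values()]
    return max(rates) - min(rates)


# Illustrative toy data, not drawn from any real dataset.
data = [
    {"group": "A", "label": 1},
    {"group": "A", "label": 1},
    {"group": "A", "label": 0},
    {"group": "A", "label": 1},
    {"group": "B", "label": 0},
    {"group": "B", "label": 1},
]
report = audit_groups(data)
print(report)
print(positive_rate_gap(report))  # 0.25
```

In this toy example, group A makes up two thirds of the records and has a positive rate of 0.75, compared with 0.5 for group B. The numbers alone cannot tell you whether that gap reflects a genuine difference or historical bias; they only flag where to look.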

Transparency and Explainability

Clarity in AI decision-making processes is critical for fostering trust and accountability. Many advanced algorithms function as black boxes, obscuring the logic behind their outputs. Prioritizing transparent design practices enables stakeholders to comprehend how decisions are made and spot potential errors or biases. Detailed documentation of inputs, methodologies, and outputs is essential for achieving this goal.

Explainable AI (XAI) methods make complex systems more interpretable. Feature-attribution techniques such as permutation importance, LIME, and SHAP estimate how much each input contributes to a model's predictions. This lets users grasp the rationale behind AI-generated decisions and judge whether the system can be relied upon.
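Permutation feature importance is one simple, model-agnostic XAI technique. It shuffles one input feature at a time and measures how much accuracy drops as a result. The minimal sketch below assumes the model is exposed as a plain Python function that maps a feature row to a 0/1 prediction. The toy "black box" model and its data are hypothetical.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(1 for row, label in zip(X, y) if model(row) == label) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is randomly shuffled.

    A large drop suggests the model relies on that feature; a drop near
    zero suggests it does not.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    n_features = len(X[0])
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = []
            for row, value in zip(X, column):
                perturbed = list(row)
                perturbed[j] = value  # break the link between feature j and the label
                X_perm.append(perturbed)
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "black box": it only ever looks at feature 0.
def model(row):
    return 1 if row[0] > 0.5 else 0


X = [[0.9, 0.1], [0.8, 0.7], [0.7, 0.3], [0.6, 0.9],
     [0.4, 0.2], [0.3, 0.8], [0.2, 0.5], [0.1, 0.6]]
y = [1, 1, 1, 1, 0, 0, 0, 0]
print(permutation_importance(model, X, y))
```

Feature 1 receives an importance of exactly zero because the model never reads it, while feature 0 receives a positive score. Maintained libraries provide fuller versions of this idea; scikit-learn, for example, offers `sklearn.inspection.permutation_importance`.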

Accountability and Responsibility

Assigning liability for AI-related errors or harms presents significant challenges. Clear accountability frameworks must be established to ensure developers and deployers answer for their systems' actions. This includes creating pathways for recourse when AI causes damage. Such measures are crucial for maintaining trust and preventing reckless implementation.

Well-defined roles throughout the AI lifecycle are equally important. Implementing structured processes to identify, reduce, and address potential risks helps safeguard against unintended consequences.

Privacy and Data Security

The extensive personal data requirements of AI systems raise serious privacy issues. Safeguarding user information through strict security protocols is fundamental to ethical AI deployment. Compliance with data protection laws, combined with robust encryption and access controls, helps prevent unauthorized use of sensitive data. Obtaining explicit user consent and employing anonymization techniques further strengthen privacy protections.
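As a rough illustration of one such technique, the sketch below pseudonymizes a record in three steps. First, it removes direct identifiers. Second, it replaces them with a keyed hash (HMAC-SHA256) so that records for the same person can still be linked. Third, it coarsens exact age into a ten-year band. The field names and the key are hypothetical. Note that this is pseudonymization rather than full anonymization: remaining quasi-identifiers, such as a postcode combined with an age band, can still allow re-identification, and anyone holding the key can re-link tokens to known identifiers.

```python
import hashlib
import hmac

# Hypothetical field names; adapt to the actual schema.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize(record, secret_key, id_field="email"):
    """Replace direct identifiers with a keyed hash and coarsen age.

    secret_key must be bytes and stored outside the dataset (for example in
    a secrets manager); anyone holding it can hash a candidate identifier
    and match it against the tokens.
    """
    token = hmac.new(secret_key, record[id_field].encode("utf-8"), hashlib.sha256).hexdigest()
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["subject_id"] = token  # stable across records for the same person
    if "age" in cleaned:
        low = (cleaned["age"] // 10) * 10
        cleaned["age"] = f"{low}-{low + 9}"  # generalize exact age into a 10-year band
    return cleaned


record = {"name": "Ada", "email": "ada@example.com", "age": 37, "zip": "02139"}
print(pseudonymize(record, secret_key=b"example-key-do-not-use"))
```

A keyed hash is used instead of a bare SHA-256 of the email address. An unkeyed hash of a guessable value can be reversed simply by hashing candidate addresses and comparing the results.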

Vigilant data security is essential for preventing privacy violations and protecting individuals from harm. Regular security audits, together with encryption of data both at rest and in transit, are critical components of this defensive approach.

Beneficial Use and Societal Impact

AI development should prioritize societal benefits while minimizing potential drawbacks. This necessitates evaluating broader impacts on employment, economic systems, and social dynamics. Aligning AI systems with human values and collective welfare represents a cornerstone of ethical governance. Thorough impact assessments and sustained stakeholder engagement are vital for achieving this alignment.

Encouraging responsible innovation requires concerted effort. Developing ethical guidelines, sharing best practices, and fostering collaboration across disciplines helps ensure AI serves the public good.

Addressing Bias and Fairness in AI Systems

Understanding the Scope of Bias

Despite perceptions of objectivity, AI systems frequently inherit biases from their training data. These distortions, whether from societal prejudices or unrepresentative datasets, can produce unfair results. Detecting and mitigating these biases is essential for creating equitable AI applications. Recognizing potential bias across different models and algorithms forms the basis for effective intervention.

Unchecked bias can cause anything from minor prediction errors to substantial outcome disparities between demographic groups. For instance, facial recognition systems trained predominantly on images of lighter-skinned individuals are often markedly less accurate at identifying darker-skinned people. This demonstrates how limitations in training data translate directly into algorithmic prejudice.
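The facial recognition example points to a general practice: report performance separately for each group rather than only in aggregate, because a respectable overall score can hide a large gap. The minimal sketch below uses toy labels and predictions; the group names and values are hypothetical.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each demographic group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Toy labels and predictions for two hypothetical groups.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
groups = ["group_1"] * 4 + ["group_2"] * 4

per_group = accuracy_by_group(y_true, y_pred, groups)
overall = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
print(per_group)  # {'group_1': 1.0, 'group_2': 0.5}
print(overall)    # 0.75
```

An overall accuracy of 0.75 looks acceptable on its own. Yet one group is served perfectly, while the other does no better than a coin flip.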

Mitigating Bias Through Data Preparation

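Comprehensive data preparation is paramount for reducing AI bias. This involves rigorously examining datasets to identify and correct imbalances that might introduce prejudice. Cleaning steps such as correcting inconsistent or mislabeled records can substantially improve fairness. Outlier removal can help as well, but it should be applied with care: records from underrepresented groups are easily mistaken for outliers, and discarding them can worsen the very imbalance being corrected.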

Strategies such as data augmentation and demographic balancing help create more representative training sets, addressing bias at its source by ensuring equitable representation. Resampling methods, such as oversampling underrepresented groups or undersampling overrepresented ones, can counteract the remaining imbalances.
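Random oversampling is one of the simplest resampling methods. It duplicates randomly chosen rows from smaller groups until every group matches the size of the largest one. The sketch below assumes records are dictionaries keyed by a hypothetical `group` field and that the toy data is illustrative.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(records, group_key="group", seed=0):
    """Randomly duplicate rows of smaller groups until every group matches the largest."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for row in records:
        by_group[row[group_key]].append(row)
    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # Sample with replacement to make up the shortfall (k=0 for the largest group).
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    rng.shuffle(balanced)
    return balanced


records = [{"group": "A", "x": i} for i in range(5)] + [{"group": "B", "x": i} for i in range(2)]
balanced = oversample_to_balance(records)
print(Counter(row["group"] for row in balanced))  # both groups now have 5 rows
```

Duplicated rows add no new information and can encourage overfitting to the few minority examples available. Undersampling takes the opposite trade-off: it trims larger groups (for instance with `rng.sample`) and discards data instead.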

Promoting Fairness Through Algorithm Design

Algorithm design plays an equally crucial role in ensuring fairness. Building bias mitigation into the model itself can prevent discriminatory outcomes; for example, incorporating fairness constraints during optimization helps keep predictions from systematically favoring specific groups.

Fairness-aware learning techniques offer a related approach: they discourage biased patterns by penalizing unfair predictions during training. Such deliberate design choices are indispensable for developing AI systems that are both accurate and equitable.
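One way to penalize unfair predictions during training is to add a fairness term to the loss. The sketch below uses a specific, simple instance of that idea: plain logistic regression whose cross-entropy loss is augmented with `lam` times the squared difference in mean predicted probability between two groups (a soft demographic-parity penalty). The data, the penalty weight `lam`, the learning rate, and the helper names are all hypothetical. In the toy data, feature 1 acts as a proxy for group membership. Real projects would typically rely on a maintained library rather than hand-rolled gradient descent.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w, b, X):
    return [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) for x in X]


def mean_score_gap(w, b, X, groups):
    """Absolute difference in mean predicted probability between groups 0 and 1."""
    p = predict_proba(w, b, X)
    g0 = [pi for pi, g in zip(p, groups) if g == 0]
    g1 = [pi for pi, g in zip(p, groups) if g == 1]
    return abs(sum(g0) / len(g0) - sum(g1) / len(g1))


def train_fair_logreg(X, y, groups, lam=0.0, lr=0.3, epochs=3000):
    """Gradient descent on cross-entropy + lam * (mean_p_group0 - mean_p_group1)^2."""
    n, dim = len(X), len(X[0])
    idx0 = [i for i, g in enumerate(groups) if g == 0]
    idx1 = [i for i, g in enumerate(groups) if g == 1]
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        p = predict_proba(w, b, X)
        # Gradient of the average cross-entropy loss.
        grad_w = [sum((p[i] - y[i]) * X[i][j] for i in range(n)) / n for j in range(dim)]
        grad_b = sum(p[i] - y[i] for i in range(n)) / n
        # Gradient of the fairness penalty, using dp_i/dtheta = p_i * (1 - p_i) * x_i.
        gap = sum(p[i] for i in idx0) / len(idx0) - sum(p[i] for i in idx1) / len(idx1)
        s = [p[i] * (1 - p[i]) for i in range(n)]
        for j in range(dim):
            d0 = sum(s[i] * X[i][j] for i in idx0) / len(idx0)
            d1 = sum(s[i] * X[i][j] for i in idx1) / len(idx1)
            grad_w[j] += 2 * lam * gap * (d0 - d1)
        d0_b = sum(s[i] for i in idx0) / len(idx0)
        d1_b = sum(s[i] for i in idx1) / len(idx1)
        grad_b += 2 * lam * gap * (d0_b - d1_b)
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data: feature 0 is a score, feature 1 is a proxy equal to 1 for group 0.
X = [[0.9, 1], [0.6, 1], [0.4, 1], [0.3, 1],
     [0.8, 0], [0.5, 0], [0.2, 0], [0.7, 0]]
y = [1, 1, 0, 1, 1, 0, 0, 0]
groups = [0, 0, 0, 0, 1, 1, 1, 1]

w_base, b_base = train_fair_logreg(X, y, groups, lam=0.0)
w_fair, b_fair = train_fair_logreg(X, y, groups, lam=5.0)
print("gap without penalty:", mean_score_gap(w_base, b_base, X, groups))
print("gap with penalty:   ", mean_score_gap(w_fair, b_fair, X, groups))
```

Without the penalty, the gap should end up close to 0.5, the difference in label rates between the two groups. With the penalty, the gap is noticeably smaller. Raising `lam` trades more predictive fit for a smaller gap, so choosing its value is a policy decision as much as a technical one.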