Beyond the Basics: Exploring Natural Speech Mimicry

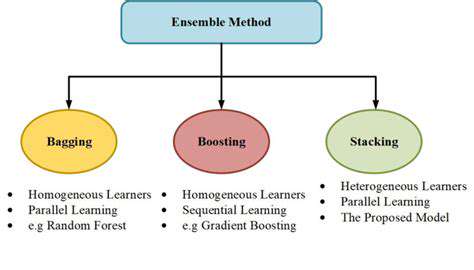

Modern speech synthesis has evolved far beyond basic text-to-speech. Current voice generation systems aim to reproduce the full range of human vocal expression: the pitch movement, rhythmic variation, and emotional coloring that make natural speech so compelling. The resulting synthetic voices do more than convey information; their realism lets listeners engage with them much as they would with a human speaker. Behind this progress are multiple neural networks working in concert to analyze and recreate the complex patterns found in spontaneous human speech.

What truly separates next-generation systems from their predecessors is their capacity for contextual interpretation. Rather than simply converting text to sound, these platforms analyze the underlying meaning and emotional content of words, enabling them to modulate vocal delivery in ways that feel organic and appropriate to the situation.

The Role of Contextual Understanding

Effective speech synthesis demands sophisticated contextual awareness. Modern systems don't process words in isolation; they analyze entire sentences and paragraphs to work out how emphasis, pacing, and inflection should vary with meaning. This deeper comprehension allows for the subtle vocal nuances that make synthetic speech increasingly hard to distinguish from a human voice.

Consider the phrase "That's great": its meaning shifts dramatically based on delivery. Said with rising inflection, it expresses genuine enthusiasm. With flat intonation, it might convey sarcasm or disinterest. Advanced systems can detect these contextual clues from surrounding text and adjust vocal output accordingly.
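As a rough illustration of the idea, the toy sketch below picks an intonation for "That's great" from the sentence that comes before it. The cue word lists and the function name `choose_intonation` are assumptions made up for this example. Production systems learn such cues from data instead of relying on hand-written lists.

```python
import re

# Hypothetical cue words for this sketch; real systems learn these from data.
POSITIVE_CUES = {"won", "passed", "promoted", "finally", "love"}
NEGATIVE_CUES = {"broke", "cancelled", "late", "again", "lost"}


def choose_intonation(context: str) -> str:
    """Return 'rising' or 'flat' for a "That's great" that follows `context`."""
    words = set(re.findall(r"[a-z']+", context.lower()))
    positive = len(words & POSITIVE_CUES)
    negative = len(words & NEGATIVE_CUES)
    # Bad news followed by "That's great" is more likely sarcastic,
    # so the sketch picks flat delivery when negative cues dominate.
    return "flat" if negative > positive else "rising"


print(choose_intonation("I finally passed my driving test."))  # rising
print(choose_intonation("The train is late again."))           # flat
```

Even this crude rule shows why the surrounding text matters: the words "That's great" never change, only the evidence around them does.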

Advanced Acoustic Modeling for Realism

The quest for vocal realism has led to breakthroughs in acoustic modeling. Today's models don't just reproduce phonemes; they also capture the audible effects of breath control, articulator positioning, and even the minor imperfections that characterize natural speech. This comprehensive approach yields synthetic voices with rich, human-like texture and variability.

These models undergo extensive training using diverse speech datasets representing different ages, accents, and speaking styles. The result is synthetic voices that capture the beautiful imperfections of human speech - the slight vocal fry, the occasional breath sounds, the natural variations in volume that make speech feel alive.

Emotional Nuances and Intonation

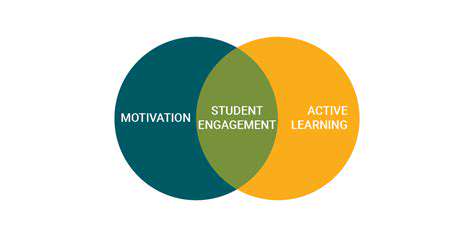

The most sophisticated speech synthesis systems now incorporate emotional intelligence. By analyzing text for emotional cues, they can adjust vocal qualities to match the intended sentiment. A system might brighten its tone for joyful content, adopt a slower pace for serious material, or add subtle tremolo for emotional passages - creating an experience that resonates with listeners on a deeper level.
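One common way to pass this kind of adjustment to a synthesis engine is SSML, the W3C markup for speech synthesis, whose `<prosody>` element carries `rate` and `pitch` attributes. The sketch below maps an emotion label to prosody settings. The preset values and the function name `to_ssml` are illustrative assumptions, and the output omits the version and namespace attributes that a standalone SSML document would carry.

```python
from xml.sax.saxutils import escape

# Illustrative presets; the numbers are assumptions, not tuned values.
PROSODY_BY_EMOTION = {
    "joyful": {"rate": "medium", "pitch": "+10%"},
    "serious": {"rate": "slow", "pitch": "-5%"},
    "neutral": {"rate": "medium", "pitch": "+0%"},
}


def to_ssml(text: str, emotion: str) -> str:
    """Wrap `text` in an SSML <prosody> element chosen by emotion label."""
    # Unknown labels fall back to the neutral preset.
    settings = PROSODY_BY_EMOTION.get(emotion, PROSODY_BY_EMOTION["neutral"])
    return (
        f'<speak><prosody rate="{settings["rate"]}" pitch="{settings["pitch"]}">'
        f"{escape(text)}</prosody></speak>"
    )


print(to_ssml("We did it!", "joyful"))
```

In a real pipeline the emotion label would come from a text classifier, not be passed in by hand, and the presets would be tuned per voice.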

This emotional attunement proves particularly valuable in applications like audiobook narration or virtual therapy assistants, where appropriate vocal expression significantly impacts user engagement and effectiveness.

The Importance of Speaker Variability

Human voices vary tremendously based on physiology, background, and momentary state. Advanced synthesis systems embrace this diversity by offering customizable vocal parameters that go beyond simple pitch and speed adjustments. Users can fine-tune characteristics like vocal tract length, harmonic emphasis, and even personality markers to create voices that suit specific contexts.
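To see why vocal tract length is such a useful control, consider the textbook approximation that treats the vocal tract as a uniform tube, closed at the glottis and open at the lips. Its resonances (formants) fall at odd multiples of c / (4L), so a shorter tract raises every formant. The sketch below is a simplified acoustic model with a hypothetical function name, not a description of how any particular synthesis product implements the parameter.

```python
SPEED_OF_SOUND_M_S = 350.0  # approximate speed of sound in warm, humid air


def tube_formants(tract_length_m: float, count: int = 3) -> list[float]:
    """Resonances in Hz of a uniform tube closed at one end: F_n = (2n - 1) * c / (4L)."""
    if tract_length_m <= 0:
        raise ValueError("tract length must be positive")
    return [
        (2 * n - 1) * SPEED_OF_SOUND_M_S / (4 * tract_length_m)
        for n in range(1, count + 1)
    ]


# Compare a longer and a shorter tract (lengths in metres).
print([round(f) for f in tube_formants(0.175)])
print([round(f) for f in tube_formants(0.140)])
```

With these assumed values, a 17.5 cm tract gives formants near 500, 1500, and 2500 Hz, while a 14 cm tract shifts them up to about 625, 1875, and 3125 Hz. That upward shift is part of what makes a voice sound smaller or younger.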

This flexibility opens new possibilities for personalized digital assistants, educational tools, and entertainment applications where voice character plays a crucial role in user experience. Imagine language learning software that provides regionally appropriate pronunciation models, or gaming platforms that generate unique character voices on demand.

The Future of Conversational AI

As speech synthesis approaches human parity, we stand at the threshold of truly natural human-computer interaction. Future systems won't just understand our words - they'll comprehend our emotional state through vocal analysis and respond with appropriately nuanced speech. This advancement promises to revolutionize everything from customer service interactions to mental health support applications.

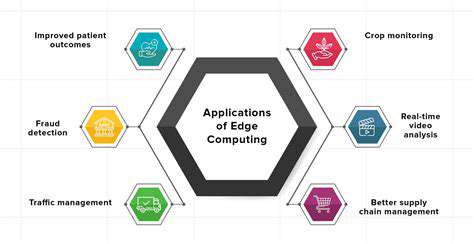

Applications Across Industries

Voice Cloning and Realistic Speech Synthesis

The field of voice replication technology has made astonishing progress, enabling the creation of synthetic voices that capture an individual's unique vocal fingerprint. Beyond basic voice characteristics, these systems can reproduce personal speech patterns, habitual phrasing, and even unconscious vocal tics. This capability has profound implications for archival and educational applications: where recordings of a speaker survive, historical speeches can be re-created in a close approximation of the original voice, and interactive learning experiences can be built with personalized narration.

In media production, this technology allows for unprecedented creative possibilities. Directors can adjust dialogue delivery in post-production without recalling actors, or create synthetic voices for animated characters that maintain perfect consistency throughout long production schedules.

Accessibility and Inclusivity

Voice synthesis technology is breaking down communication barriers for individuals with speech impairments. Modern systems can create personalized synthetic voices for those who have lost their natural speaking ability, preserving their vocal identity. For language learners, these tools provide authentic pronunciation models that adapt to the learner's native language influences, creating more effective and personalized instruction.

The potential extends to creating communication aids for nonverbal individuals that sound natural and age-appropriate, significantly improving social integration and quality of life. This represents one of the most meaningful applications of speech synthesis technology.

Entertainment and Creative Applications

The entertainment industry is being transformed by advanced speech synthesis. Audiobook platforms can now offer multiple narration styles for the same title, allowing listeners to choose their preferred voice characteristics. In gaming, dynamic voice generation enables non-player characters to deliver context-appropriate dialogue with natural variation, eliminating the repetitive patterns that break immersion in traditional games.
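The "natural variation" mentioned above can be approximated, in its simplest form, by nudging delivery parameters a little on every utterance. The sketch below jitters speaking rate and pitch around a character's baseline. The function name, the parameter names, and the jitter ranges are all assumptions chosen for illustration.

```python
import random


def vary_delivery(base_rate: float, base_pitch_st: float, rng: random.Random,
                  rate_jitter: float = 0.08, pitch_jitter_st: float = 1.0) -> dict:
    """Return slightly perturbed rate (multiplier) and pitch (semitones) for one line."""
    rate = base_rate * (1.0 + rng.uniform(-rate_jitter, rate_jitter))
    pitch = base_pitch_st + rng.uniform(-pitch_jitter_st, pitch_jitter_st)
    return {"rate": round(rate, 3), "pitch_st": round(pitch, 2)}


rng = random.Random(42)  # seeded so a scene can be replayed exactly
for _ in range(3):
    print(vary_delivery(1.0, 0.0, rng))
```

Passing in a seeded generator keeps the variation reproducible, which helps when a scene has to be debugged or replayed.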

Filmmakers can use this technology for historical recreations or to seamlessly dub content into multiple languages while preserving the original performances' emotional authenticity. The creative possibilities are limited only by imagination.

Customer Service and Virtual Assistants

Businesses are leveraging customized voice synthesis to create branded virtual assistants with distinct, recognizable voices. These systems can adjust their speaking style based on caller demographics or emotional state, creating more effective and satisfying interactions. By matching vocal characteristics to specific customer segments or cultural contexts, companies can build stronger emotional connections while maintaining consistent brand messaging across all touchpoints.

The technology also enables true multilingual support - a single virtual assistant can converse fluently in multiple languages while maintaining a consistent vocal identity, dramatically improving the customer experience for global brands.