Data Acquisition Strategies

Effective data collection hinges on a robust strategy tailored to the specific research objectives. This involves careful consideration of the data sources available, their reliability, and the potential biases inherent in each. Identifying the most relevant data sources is crucial for ensuring the accuracy and validity of the subsequent analysis. Researchers must meticulously document the selection criteria and procedures used to collect the data, which will be essential for maintaining transparency and reproducibility.

Different data collection methods, such as surveys, experiments, and observational studies, each possess unique strengths and weaknesses. Understanding these nuances will help researchers choose the optimal approach for their research questions. Furthermore, the chosen methodology significantly impacts the scope and limitations of the analysis.

Data Validation and Cleaning

Data validation is a critical step in the data processing pipeline. This process involves scrutinizing the collected data to identify inconsistencies, errors, and outliers. The goal is to ensure the integrity and reliability of the data before proceeding with further analysis. Cleaning the data involves correcting errors, handling missing values, and transforming data into a suitable format for analysis.

Careful attention to data validation and cleaning is essential to avoid misleading results. Data inaccuracies can significantly skew the interpretation of findings and ultimately compromise the value of the research. A robust validation process is therefore paramount in ensuring the quality and reliability of the data analysis.
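To make the validation and cleaning steps concrete, here is a minimal sketch using only the Python standard library. It assumes a single numeric column, treats `None` and NaN as missing, and flags outliers with the common 1.5 × IQR rule. The function name `clean_readings` and the sample values are hypothetical, and the outlier rule is one convention among several, not a recommendation from the text above.

```python
import statistics

def clean_readings(values):
    """Drop missing entries, then split the rest into kept values and IQR outliers."""
    # Treat None and NaN as missing; NaN is the only float not equal to itself.
    present = [v for v in values if v is not None and v == v]
    # Quartiles from the standard library (needs at least two data points).
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    kept = [v for v in present if low <= v <= high]
    outliers = [v for v in present if v < low or v > high]
    return kept, outliers

# Hypothetical readings with two missing entries and one implausible value.
raw = [10.1, 9.8, None, 10.3, 55.0, 10.0, float("nan"), 9.9, 10.2, 10.4]
kept, outliers = clean_readings(raw)
print("kept:", kept)
print("outliers:", outliers)
```

Returning the outliers separately, rather than silently discarding them, keeps the cleaning step auditable: a flagged value may be a sensor fault or a genuine extreme, and that decision usually needs domain knowledge.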

Data Transformation Techniques

Data transformation is a crucial step in preparing data for analysis. This process often involves converting data from one format to another, or applying mathematical functions to modify the data. Examples include converting categorical variables to numerical representations or standardizing data to a common scale.

Appropriate data transformation techniques can significantly improve the interpretability and efficiency of the analysis. Selecting the right transformation method depends on the nature of the data and the specific research objectives. For instance, a logarithmic transformation can reduce right skew in strictly positive data, while standardization (subtracting the mean and dividing by the standard deviation) makes variables measured on different scales directly comparable.
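The two transformations mentioned above can be sketched in a few lines of standard-library Python. The function names and the toy income figures are illustrative assumptions; the sketch uses the natural logarithm and the sample standard deviation, which are common choices but not the only ones.

```python
import math
import statistics

def log_transform(values):
    """Natural log of each value; only defined for strictly positive inputs."""
    if any(v <= 0 for v in values):
        raise ValueError("log transform requires strictly positive values")
    return [math.log(v) for v in values]

def standardize(values):
    """Rescale to zero mean and unit sample standard deviation (z-scores)."""
    mean = statistics.fmean(values)
    stdev = statistics.stdev(values)  # sample standard deviation, needs >= 2 values
    if stdev == 0:
        raise ValueError("cannot standardize a constant column")
    return [(v - mean) / stdev for v in values]

# Hypothetical right-skewed data: one value dwarfs the rest.
incomes = [20_000, 25_000, 30_000, 40_000, 400_000]
logged = log_transform(incomes)
z_scores = standardize(logged)
print([round(z, 2) for z in z_scores])
```

Applying the log first pulls the extreme value closer to the rest, so the z-scores that follow are less dominated by a single observation.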

Data Storage and Management

Robust data storage and management practices are essential to ensure data accessibility, security, and long-term preservation. This involves selecting appropriate storage systems and implementing data backup and recovery strategies. Secure data storage is paramount to protect sensitive information and maintain confidentiality.

Effective data management practices also include establishing clear metadata standards, creating detailed documentation, and ensuring data accessibility for future analysis or collaboration.

Statistical Analysis Techniques

Appropriate statistical techniques are essential for extracting meaningful insights from the processed data. The choice of technique depends on the nature of the data and the research question. Descriptive statistics, for example, are used to summarize and describe the data, while inferential statistics are used to draw conclusions about a larger population based on a sample.

Statistical analysis allows for a deeper understanding of patterns and relationships within the data. Selecting the appropriate statistical methods is crucial for drawing valid conclusions from the data.
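The distinction between descriptive and inferential statistics can be illustrated with a short sketch. The first function summarizes the sample itself; the second uses the sample to estimate a range for the population mean. The interval here relies on a normal approximation, which is an assumption of this example: for small samples a t-distribution gives a wider and more appropriate interval. Function names and data are hypothetical.

```python
import math
import statistics

def describe(sample):
    """Descriptive statistics: summarize the sample itself."""
    return {
        "n": len(sample),
        "mean": statistics.fmean(sample),
        "median": statistics.median(sample),
        "stdev": statistics.stdev(sample),
    }

def mean_confidence_interval(sample, confidence=0.95):
    """Inferential statistics: normal-approximation interval for the population mean."""
    summary = describe(sample)
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * summary["stdev"] / math.sqrt(summary["n"])
    return summary["mean"] - margin, summary["mean"] + margin

# Hypothetical measurements.
sample = [4.8, 5.1, 5.0, 4.9, 5.2, 5.0, 5.1, 4.9]
summary = describe(sample)
low, high = mean_confidence_interval(sample)
print(summary)
print(f"approximate 95% interval for the mean: ({low:.3f}, {high:.3f})")
```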

Data Visualization Methods

Data visualization techniques are powerful tools for exploring and communicating patterns, trends, and insights within data. Visual representations, such as charts and graphs, can make complex datasets more accessible and understandable. Effective visualization can significantly enhance the clarity and impact of research findings.

Choosing the right visualization method is crucial for conveying the intended message. Different types of visualizations are appropriate for different types of data and analysis goals. For instance, bar charts are effective for comparing categorical data, while scatter plots are suitable for identifying correlations between variables.

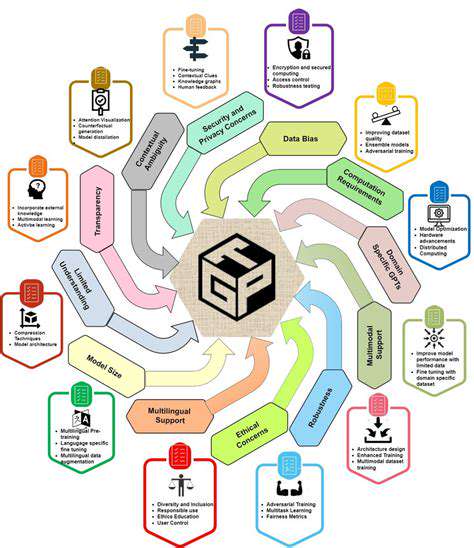

Ethical Considerations in Data Handling

Ethical considerations are paramount throughout the data collection and processing stages. Researchers must adhere to ethical guidelines for data privacy, confidentiality, and informed consent. Data anonymization and secure storage are essential to protect the privacy of individuals or entities involved.

Transparency and accountability are vital aspects of ethical data handling. Researchers should meticulously document all procedures, including data collection methods, analysis techniques, and potential biases. This transparency allows for scrutiny and reproducibility of the research findings.

Real-Time Anomaly Detection and Predictive Modeling: Proactive Maintenance Strategies

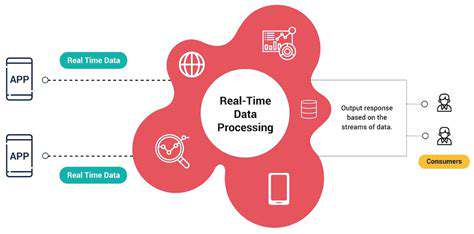

Real-Time Anomaly Detection: The Foundation for Proactive Maintenance

Real-time anomaly detection systems underpin proactive maintenance strategies. These systems continuously monitor equipment performance and identify deviations from expected behavior in real time. This capability allows for swift responses to potential issues, helping to prevent costly breakdowns and improve operational efficiency. By analyzing sensor data, historical trends, and other relevant factors, these systems can flag unusual patterns that might indicate impending failures. This early warning is a cornerstone of predictive maintenance, enabling technicians to schedule maintenance proactively rather than reactively.

The effectiveness of anomaly detection relies heavily on the quality and volume of data being processed. Sophisticated algorithms and machine learning models are employed to identify patterns and anomalies that are otherwise difficult to spot by human observation. This allows for a more comprehensive understanding of equipment health, enabling more informed decisions about maintenance schedules and resource allocation.
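As a simple illustration of flagging deviations from expected behavior in a sensor stream, the sketch below uses a rolling z-score: each new reading is compared with the mean and standard deviation of a sliding window of recent readings. This is a deliberately basic statistical baseline, not one of the machine learning models mentioned above, and the class name, window size, threshold, and sample stream are all assumptions made for the example.

```python
from collections import deque
import statistics

class RollingZScoreDetector:
    """Flag readings that deviate strongly from a sliding window of recent values."""

    def __init__(self, window=20, threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def update(self, reading):
        """Return True if the reading looks anomalous; normal readings join the window."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0:
                is_anomaly = abs(reading - mean) / stdev > self.threshold
        # Only learn from normal readings so one spike does not inflate the baseline.
        if not is_anomaly:
            self.history.append(reading)
        return is_anomaly

# Hypothetical temperature stream with a single spike.
detector = RollingZScoreDetector(window=10, threshold=3.0)
stream = [50.1, 49.8, 50.3, 50.0, 49.9, 50.2, 50.1, 72.5, 50.0]
flags = [detector.update(x) for x in stream]
print(flags)
```

Excluding flagged readings from the window keeps the baseline stable, but it also means a genuine, lasting shift in operating level would keep being flagged. A production system would need a policy for resetting or re-learning the baseline.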

Predictive Modeling: Forecasting Equipment Failures

Predictive modeling takes the insights from real-time anomaly detection and extends them into the future. By leveraging historical data, sensor readings, and environmental factors, these models estimate the likelihood of equipment failures within a given time horizon. This capability empowers maintenance teams to schedule preventative maintenance tasks in advance, reducing downtime and extending equipment lifespan. Retraining the models as new data arrives helps keep the forecasts accurate as equipment ages and operating conditions change.

Different predictive models can be employed depending on the type of equipment and the nature of the data. For example, regression models might be used to predict the remaining useful life of a component based on its current operating conditions. Alternatively, machine learning algorithms like support vector machines or neural networks can identify complex patterns and relationships in the data to produce more accurate predictions. This flexibility allows for a tailored approach to forecasting, addressing the specific needs of different industries and equipment types.
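The regression approach to remaining useful life can be sketched as follows: fit a straight line to a wear indicator over operating hours, then extrapolate to the point where the line crosses a failure threshold. The linear degradation trend, the threshold value, and the names `fit_line` and `remaining_useful_life` are assumptions for illustration; real degradation is often nonlinear and a point estimate like this carries no uncertainty bounds.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def remaining_useful_life(hours, wear, failure_threshold):
    """Hours until the fitted wear trend reaches the threshold, or None if not rising."""
    slope, intercept = fit_line(hours, wear)
    if slope <= 0:
        return None  # no upward degradation trend to extrapolate
    hours_at_failure = (failure_threshold - intercept) / slope
    # Measured from the most recent observation; never negative.
    return max(0.0, hours_at_failure - hours[-1])

# Hypothetical wear indicator sampled every 100 operating hours.
hours = [0, 100, 200, 300, 400]
wear = [1.0, 1.5, 2.0, 2.5, 3.0]
rul = remaining_useful_life(hours, wear, failure_threshold=5.0)
print(f"estimated remaining useful life: {rul:.0f} hours")
```

With this perfectly linear toy data the wear rises 0.5 units per 100 hours, so the trend reaches the threshold of 5.0 at about 800 hours, roughly 400 hours after the last observation.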

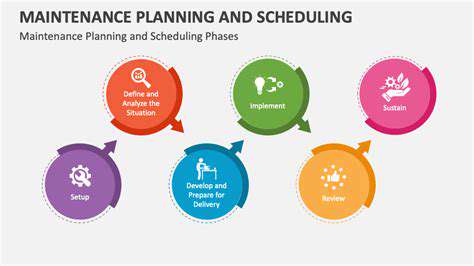

Proactive Maintenance Strategies: Optimizing Operational Efficiency

Implementing proactive maintenance strategies based on real-time anomaly detection and predictive modeling shifts maintenance from reactive to preventative, reducing unexpected downtime and its associated costs. The ability to predict potential failures lets maintenance teams schedule tasks at times that cause the least disruption to production. It also supports better resource allocation, so that maintenance personnel, spare parts, and tools are available where and when they are needed. The result is a more efficient and reliable operational environment, with lower costs and higher productivity.

Beyond cost savings, proactive maintenance strategies contribute to improved safety by identifying potential hazards early. Predicting potential failures often reveals underlying issues that could lead to serious accidents. This aspect of proactively addressing potential problems is vital for creating a safer work environment and minimizing the risk of catastrophic events. A culture of proactive maintenance fosters a more reliable and sustainable operational framework.

The integration of these strategies also leads to improved asset utilization. By ensuring that equipment is maintained in optimal condition, companies can maximize their return on investment. This translates to higher operational efficiency, improved product quality, and ultimately, increased profitability.

Security Considerations and Data Management in Edge Computing Environments

Data Security at the Edge

Protecting sensitive data at the edge is paramount, as data often traverses various networks and devices before reaching the central data center. This necessitates encryption throughout the entire data lifecycle, both in transit and at rest, from initial collection to final storage. Secure communication channels between edge devices and the cloud, such as TLS (the successor to the now-deprecated SSL protocols), are crucial to prevent unauthorized access and data interception. Furthermore, access controls and authentication mechanisms should be meticulously designed to restrict data access to authorized personnel only, ensuring compliance with relevant security regulations and policies.
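On the device side, a secure channel to the cloud might be configured along these lines with Python's standard `ssl` module. The helper name `make_edge_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions of this sketch; the appropriate minimum version and certificate authority bundle depend on the deployment.

```python
import ssl

def make_edge_tls_context(ca_file=None):
    """Build a client-side TLS context that verifies the server certificate."""
    # create_default_context enables certificate verification and hostname checking.
    context = ssl.create_default_context(cafile=ca_file)
    # Refuse legacy protocol versions; TLS 1.2 is the floor in this sketch.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

context = make_edge_tls_context()
print(context.verify_mode, context.check_hostname, context.minimum_version)
```

The resulting context would then be used to wrap an outgoing connection, for example with `context.wrap_socket(sock, server_hostname=host)`, where `sock` and `host` stand for the device's socket and the cloud endpoint's hostname.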

Implementing intrusion detection and prevention systems (IDS/IPS) at the edge is vital to identify and mitigate potential security threats in real time. These systems should be configured to detect malicious activities, such as unauthorized access attempts, malware infections, and denial-of-service attacks, and promptly alert the appropriate personnel for immediate action. Regular security audits and vulnerability assessments are critical to proactively identify and address potential weaknesses in the edge infrastructure and applications, strengthening the overall security posture.

Data Management Challenges in Distributed Environments

Managing data across a distributed edge computing network presents significant challenges. Maintaining data consistency and integrity across multiple, potentially disparate, edge devices and data centers requires sophisticated data management strategies. Version control and data lineage tracking are essential to ensure data accuracy and traceability, especially in scenarios involving data updates and modifications. Effective data governance policies and procedures must be established to standardize data formats, quality, and access controls across the entire network, ensuring data consistency and minimizing errors.

Scalability and performance are critical considerations in edge data management. Data volumes can grow rapidly as the number of edge devices and data sources increases. Efficient data storage solutions, optimized for high throughput and low latency, are essential for handling these volumes without compromising performance. Data compression reduces the amount of data sent over the network, and caching at the edge avoids repeated requests to the central data center, which together improve transfer times and reduce central load.
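As an illustration of compressing data before it leaves an edge device, the sketch below batches readings as JSON and compresses the batch with `zlib` from the Python standard library. The record layout, the sensor name, and the helper names `pack_batch` and `unpack_batch` are hypothetical, and JSON plus zlib is just one convenient combination rather than a recommended wire format.

```python
import json
import zlib

def pack_batch(readings):
    """Serialize a batch of readings to compact JSON and compress it with zlib."""
    payload = json.dumps(readings, separators=(",", ":")).encode("utf-8")
    return zlib.compress(payload, level=6)

def unpack_batch(blob):
    """Reverse pack_batch: decompress, then parse the JSON."""
    return json.loads(zlib.decompress(blob).decode("utf-8"))

# Hypothetical batch of 200 readings from one sensor.
batch = [{"sensor": "pump-1", "t": i, "temp_c": 50.0 + (i % 3) * 0.1} for i in range(200)]
blob = pack_batch(batch)
raw_size = len(json.dumps(batch, separators=(",", ":")).encode("utf-8"))
print(f"raw: {raw_size} bytes, compressed: {len(blob)} bytes")
```

Sensor batches tend to compress well because field names and similar values repeat across records. The actual savings depend on the data, so it is worth measuring on representative payloads before relying on a particular ratio.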

Privacy and Compliance Considerations

Ensuring data privacy and compliance with relevant regulations is essential in edge computing environments. Data minimization techniques should be employed to collect only the necessary data, reducing the risk of unauthorized data disclosure and misuse. Data anonymization and pseudonymization methods can further enhance privacy protection, ensuring that sensitive information is not inadvertently exposed to unauthorized individuals or entities. Compliance with data privacy regulations, such as GDPR or CCPA, is crucial to avoid potential legal issues and maintain trust with users and stakeholders.
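Data minimization and pseudonymization can be combined in a small preprocessing step on the edge device. The sketch below keeps only an allow-list of fields and replaces the user identifier with a keyed hash (HMAC-SHA256), so the same user maps to the same token without the raw identifier leaving the device. The field names, the key, and the function names are hypothetical. Note that pseudonymized data can still count as personal data under regulations such as GDPR, because whoever holds the key can re-link it.

```python
import hashlib
import hmac

def pseudonymize(identifier, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (stable for a given key)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(record, allowed_fields):
    """Keep only the fields the analysis actually needs."""
    return {k: v for k, v in record.items() if k in allowed_fields}

# Demo key only; a real key would come from a secrets store, not source code.
key = b"demo-key-do-not-use-in-production"
record = {"user_id": "alice@example.com", "temp_c": 21.5, "home_address": "hypothetical"}

safe = minimize(record, {"user_id", "temp_c"})
safe["user_id"] = pseudonymize(safe["user_id"], key)
print(safe)
```

A keyed hash is used instead of a plain hash because identifiers such as email addresses are easy to guess and re-hash; without the key, an attacker cannot rebuild the mapping by hashing candidate values.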

Data security policies must be aligned with industry best practices and relevant regulations. Regular reviews and updates to these policies are essential to address evolving threats and compliance requirements. Clear communication channels and training programs for all personnel involved in data management and security are also vital to ensure everyone understands and adheres to the established policies. Continuous monitoring and auditing of data handling processes are key to maintaining compliance and minimizing potential risks.