Challenges and Considerations in GAN Training

Data Quality and Quantity

The foundation of any successful GAN implementation is its training data. "Garbage in, garbage out" holds particularly true here: a biased or limited dataset produces correspondingly flawed outputs. For example, a facial generation model trained predominantly on one ethnicity will struggle to generate diverse faces. Similarly, too few training samples lead to repetitive outputs that fail to capture the full variety of the target domain.
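One simple way to catch the kind of imbalance described above is to check group shares before training. The sketch below assumes each sample carries a group label; the function name, the labels, and the 10% threshold are hypothetical choices for illustration, not a standard.

```python
from collections import Counter

def underrepresented_groups(labels, min_share=0.10):
    """Return {group: share} for groups whose share of the data is below min_share."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items() if n / total < min_share}

# Hypothetical group labels attached to a face dataset.
labels = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
flagged = underrepresented_groups(labels)
print(flagged)  # {'C': 0.05}
```

A check like this only flags imbalance in attributes that were labeled in the first place; it says nothing about biases the labels do not capture.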

Model Architecture Choice

Selecting the right network structure depends on the data type and the desired outcome. For visual data, convolutional layers typically form the backbone, while sequential data may benefit from recurrent or attention-based architectures. Depth and complexity must be balanced: too simple a model cannot capture the necessary patterns, while too complex a model becomes unwieldy to train or prone to overfitting.
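For convolutional generators, depth is tied directly to output resolution, which makes the depth trade-off concrete. The sketch below uses the standard transposed-convolution output-size formula (no dilation, no output padding) to trace how a stack of layers grows a 1x1 input. The specific layer settings are an assumed DCGAN-style configuration, not something prescribed by this article.

```python
def conv_transpose_out(size, kernel, stride, padding):
    """Output size of a transposed convolution (no dilation, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel

def generator_resolutions(layers, start=1):
    """Trace spatial size through a list of (kernel, stride, padding) layers."""
    sizes = [start]
    for kernel, stride, padding in layers:
        sizes.append(conv_transpose_out(sizes[-1], kernel, stride, padding))
    return sizes

# Hypothetical stack: one projection layer, then four layers that each double the size.
layers = [(4, 1, 0)] + [(4, 2, 1)] * 4
resolutions = generator_resolutions(layers)
print(resolutions)  # [1, 4, 8, 16, 32, 64]
```

Under these assumptions, each additional doubling layer buys twice the resolution, so the target image size sets a floor on how shallow the generator can be.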

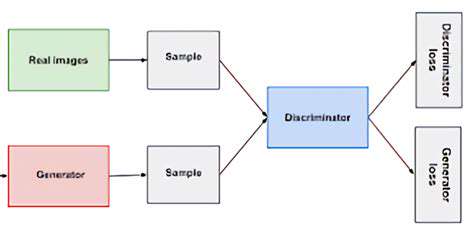

Training Stability and Convergence

Maintaining equilibrium between the generator and the discriminator resembles keeping two spinning plates balanced at once. Several techniques help stabilize the process: gradient penalties discourage large discriminator gradients with respect to its inputs, spectral normalization bounds the largest singular value of each weight matrix, and specialized loss functions provide more meaningful feedback. Monitoring loss curves and sample quality over time helps practitioners identify and address instability early.
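To make spectral normalization less abstract, here is a minimal pure-Python sketch of the underlying idea: estimate the largest singular value of a weight matrix with power iteration, then divide the weights by it. The helper names and the iteration count are assumptions for illustration; deep learning frameworks ship their own implementations, which typically run one power-iteration step per training step instead of many.

```python
import math
import random

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def l2_normalize(v, eps=1e-12):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + eps) for x in v]

def spectral_norm(W, n_iters=50, seed=0):
    """Estimate the largest singular value of W by power iteration."""
    rng = random.Random(seed)
    Wt = [list(col) for col in zip(*W)]  # transpose of W
    u = l2_normalize([rng.gauss(0.0, 1.0) for _ in range(len(W))])
    for _ in range(n_iters):
        v = l2_normalize(matvec(Wt, u))
        u = l2_normalize(matvec(W, v))
    # sigma is approximately u^T W v
    return sum(ui * wi for ui, wi in zip(u, matvec(W, v)))

def spectrally_normalize(W, n_iters=50):
    """Rescale W so its largest singular value is approximately 1."""
    sigma = spectral_norm(W, n_iters)
    return [[w / sigma for w in row] for row in W]

W = [[3.0, 0.0], [0.0, 1.0]]  # singular values are 3 and 1
sigma = spectral_norm(W)
W_sn = spectrally_normalize(W)
```

After normalization the layer cannot stretch any input direction by more than a factor of about one, which is what limits how sharply the discriminator's output can change.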

Computational Resources

The resource requirements for training sophisticated GANs shouldn't be underestimated. High-resolution image generation might demand multiple high-end GPUs running for days or weeks. Memory constraints can limit batch sizes, affecting gradient estimates and ultimately model performance. Cloud computing has democratized access to some extent, but significant projects still require careful resource planning and allocation.
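When memory caps the batch size, one common workaround is gradient accumulation: process several small micro-batches and combine their gradients before updating. The toy sketch below uses a one-parameter linear model with a mean squared error loss (an assumption chosen for simplicity, not a GAN) to show that the size-weighted average of micro-batch gradients matches the full-batch gradient.

```python
def grad_mse(w, batch):
    """Gradient with respect to w of mean((w*x - y)^2) over the batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)

def accumulated_grad(w, data, micro_batch_size):
    """Combine micro-batch gradients into the equivalent full-batch gradient."""
    total = 0.0
    for start in range(0, len(data), micro_batch_size):
        micro = data[start:start + micro_batch_size]
        # Weight by micro-batch size so an uneven final chunk is handled correctly.
        total += grad_mse(w, micro) * len(micro)
    return total / len(data)

# Made-up (x, y) pairs purely for illustration.
data = [(1.0, 2.0), (2.0, 4.5), (3.0, 5.5), (4.0, 8.0), (5.0, 9.5), (6.0, 12.5)]
full = grad_mse(0.5, data)
accum = accumulated_grad(0.5, data, micro_batch_size=2)
```

The equivalence holds for losses that are plain averages over samples. Layers that compute statistics across the batch, such as batch normalization, still see only the micro-batch, so accumulation is not a perfect substitute for a larger batch in every GAN architecture.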

Evaluating Generated Samples

Quantifying success remains one of the trickiest aspects of GAN development. While metrics like the Fréchet Inception Distance (FID) and the Inception Score provide numerical benchmarks, they don't always align with human perception of quality. Many teams take a two-pronged approach: quantitative metrics for quick comparisons, supplemented by human evaluation for final quality assessment, especially in creative applications where subjective qualities matter most.
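The idea behind FID can be illustrated in one dimension. FID fits a Gaussian to features of real and generated samples and measures the Fréchet distance between the two Gaussians; for univariate Gaussians that distance reduces to the squared difference in means plus the squared difference in standard deviations. The sketch below is this 1-D simplification only. Real FID operates on multivariate Inception-network features, and the sample values here are made up.

```python
import statistics

def frechet_distance_1d(real, fake):
    """Squared Frechet distance between Gaussians fitted to two 1-D samples."""
    mu_r, mu_f = statistics.fmean(real), statistics.fmean(fake)
    sd_r, sd_f = statistics.pstdev(real), statistics.pstdev(fake)
    return (mu_r - mu_f) ** 2 + (sd_r - sd_f) ** 2

real = [0.0, 1.0, 2.0, 3.0]
shifted = [1.0, 2.0, 3.0, 4.0]    # same spread, wrong mean
collapsed = [1.5, 1.5, 1.5, 1.5]  # right mean, no diversity

d_same = frechet_distance_1d(real, real)
d_shifted = frechet_distance_1d(real, shifted)
d_collapsed = frechet_distance_1d(real, collapsed)
```

Note that the score penalizes both a shifted mean and a loss of spread, but it cannot tell you which samples look wrong to a person, which is one reason human evaluation remains useful.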

Handling Mode Collapse

Mode collapse occurs when a generator settles into producing just a few variations rather than covering the full data distribution. It can stem from several causes: a discriminator that overpowers the generator, insufficient model capacity, or a problematic loss landscape. Approaches such as unrolled GANs, minibatch discrimination, and various regularization techniques help maintain output diversity throughout training.
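On synthetic benchmarks where the true modes are known, mode collapse can be measured directly by counting how many modes receive any generated samples. The following sketch assumes a 1-D dataset with known mode centers; the function name, centers, radius, and sample values are all hypothetical.

```python
def mode_coverage(samples, centers, radius):
    """Return (fraction of modes hit, per-mode hit counts) for 1-D samples."""
    hits = [0] * len(centers)
    for s in samples:
        nearest = min(range(len(centers)), key=lambda i: abs(s - centers[i]))
        # Only count samples that land close enough to their nearest mode.
        if abs(s - centers[nearest]) <= radius:
            hits[nearest] += 1
    covered = sum(1 for h in hits if h > 0)
    return covered / len(centers), hits

centers = [-4.0, -2.0, 0.0, 2.0, 4.0]
healthy = [c + 0.1 for c in centers] * 2      # every mode is represented
collapsed = [0.05, -0.1, 0.0, 2.1, 0.02]      # generator stuck near two modes

healthy_cov, healthy_hits = mode_coverage(healthy, centers, radius=0.5)
collapsed_cov, collapsed_hits = mode_coverage(collapsed, centers, radius=0.5)
```

Real datasets rarely come with labeled modes, so in practice this kind of check is mostly useful for debugging a training setup on toy data before scaling up.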

Addressing Vanishing Gradients

As with many deep learning systems, GANs can suffer from gradients that become too small to drive meaningful learning, particularly in deeper architectures. Architectural choices like residual connections, careful initialization schemes, and alternative activation functions help maintain healthy gradient flow. Some newer approaches even periodically inject noise or use auxiliary networks to revitalize stuck training processes.
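A scalar toy model shows why residual connections help. By the chain rule, the gradient through a stack of layers is the product of each layer's local derivative. A sigmoid's derivative never exceeds 0.25, so a plain stack shrinks the gradient geometrically, while a residual layer `x + sigmoid(x)` has a local derivative of at least 1. The sketch below assumes one scalar unit per layer with no weights, which is a deliberate oversimplification.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def chain_gradient(x, depth, residual):
    """Derivative of the stack's output with respect to its input."""
    grad = 1.0
    for _ in range(depth):
        s = sigmoid(x)
        local = s * (1.0 - s)      # derivative of sigmoid at x, at most 0.25
        if residual:
            grad *= 1.0 + local    # derivative of x + sigmoid(x)
            x = x + s
        else:
            grad *= local
            x = s
    return grad

plain = chain_gradient(0.5, depth=20, residual=False)
skip = chain_gradient(0.5, depth=20, residual=True)
```

Here `plain` is bounded above by 0.25 raised to the 20th power, while `skip` stays at or above 1, so the early layers of the residual stack still receive a usable learning signal.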

The Future of GANs in Data Synthesis

Generative Adversarial Networks (GANs) and Data Augmentation

GANs are transforming how we approach data scarcity across industries. Rather than painstakingly collecting more real-world examples, practitioners can now generate high-quality synthetic data that preserves statistical properties while expanding dataset size. This capability is revolutionizing fields like medical imaging, where patient data is both scarce and sensitive, yet critical for developing robust diagnostic algorithms.

Applications in Various Fields

The potential applications continue multiplying as the technology matures. Architects use GANs to generate building designs, game developers populate virtual worlds with unique assets, and manufacturers simulate product variations before physical prototyping. In scientific research, GANs help model complex phenomena where real-world data collection proves impractical or dangerous, opening new avenues for discovery and innovation.

Overcoming Limitations and Challenges

Current research focuses on making GANs more reliable and accessible. Techniques like progressive growing, where models first learn low-resolution features before tackling finer details, have dramatically improved training stability. Meta-learning approaches help adapt pretrained models to new domains with limited data, while quantization and pruning reduce computational demands for deployment.
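The progressive-growing idea can be sketched as a resolution schedule plus a fade-in weight that blends each new, higher-resolution layer into the network gradually. The function names, the 4-to-64 range, and the linear ramp below are assumptions for illustration; published implementations differ in their details.

```python
def resolution_schedule(start=4, target=64):
    """Resolutions visited during training, doubling at each stage."""
    schedule = []
    res = start
    while res <= target:
        schedule.append(res)
        res *= 2
    return schedule

def fade_in_alpha(step, fade_steps):
    """Linear ramp from 0 to 1 over fade_steps training steps."""
    return min(1.0, step / fade_steps)

def blend(old_upsampled, new_output, alpha):
    """Mix the upsampled old-stage output with the new layer's output."""
    return [(1.0 - alpha) * o + alpha * n for o, n in zip(old_upsampled, new_output)]

schedule = resolution_schedule()
halfway = blend([0.0, 2.0], [1.0, 4.0], fade_in_alpha(50, 100))
```

At `alpha = 0` the network behaves exactly like the previous, lower-resolution stage, and at `alpha = 1` the new layer has fully taken over, which avoids a sudden shock to an already trained model.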

Ethical Considerations and Privacy

As synthetic data becomes indistinguishable from real data, ethical questions grow more pressing. We must develop robust frameworks to prevent misuse while encouraging beneficial applications. Differential privacy techniques, watermarking schemes, and clear disclosure requirements are emerging as important safeguards. The community continues debating appropriate use cases and developing best practices for responsible deployment.
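One widely used way to apply differential privacy during training is to clip each example's gradient to a fixed norm and add Gaussian noise before averaging, in the style of DP-SGD. The sketch below shows only that clip-and-noise step. It is an assumed, simplified illustration with hypothetical names and values, and it does not track the privacy budget, which a real deployment would need.

```python
import math
import random

def clip_by_l2_norm(grad, max_norm):
    """Scale grad down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each per-example gradient, sum, add Gaussian noise, then average."""
    clipped = [clip_by_l2_norm(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise scale tied to the clipping bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [x / len(per_example_grads) for x in noisy]

# Made-up per-example gradients; the first one exceeds the clipping bound.
grads = [[3.0, 4.0], [0.3, 0.4]]
rng = random.Random(0)
no_noise = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=0.0, rng=rng)
with_noise = private_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.0, rng=rng)
```

Clipping bounds how much any single training example can influence an update, and the noise masks what influence remains. Both come at some cost to sample quality.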

The Future of GAN-Based Data Synthesis

Looking ahead, we're likely to see GANs become more specialized and efficient. Domain-specific architectures will emerge, optimized for particular data types and applications. Integration with other AI techniques, such as reinforcement learning and neuro-symbolic systems, may enable more sophisticated generation capabilities. As hardware improves and algorithms mature, workloads that currently require multi-GPU clusters may eventually run on edge devices, opening new possibilities for real-time, on-demand data synthesis.