Defining Bias in AI Algorithms

Bias in AI algorithms refers to systematic errors that consistently favor certain outcomes or groups over others. It can manifest in various ways, from skewed predictions to discriminatory outputs. Understanding the different types of bias, such as data bias, algorithmic bias, and selection bias, is crucial for evaluating the fairness and ethical implications of AI systems. Data bias arises from skewed or incomplete training datasets, which may reflect societal prejudices. Algorithmic bias stems from the design of the algorithm itself, for example its objective, its features, or the proxy variables it relies on. Selection bias occurs when the training data is not representative of the population the system is meant to serve, leading to inaccurate or unfair outcomes.
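One simple way to look for selection bias is to compare each group's share of the training sample with its share of the target population. The sketch below assumes group labels are available for every sample and that reference population shares are known; the function name `representation_gaps` and the toy numbers are hypothetical.

```python
from collections import Counter

def representation_gaps(sample_groups, population_shares):
    """Return sample share minus population share for each group.

    A negative value means the group is underrepresented in the sample.
    """
    total = len(sample_groups)
    if total == 0:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }

# Hypothetical example: group "B" is half the population but 20% of the sample.
sample = ["A"] * 8 + ["B"] * 2
gaps = representation_gaps(sample, {"A": 0.5, "B": 0.5})
```

A large negative gap does not prove that the model will be unfair, but it is a cheap signal that the data may not represent the population it is meant to serve.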

Impact of Bias on Healthcare

Biased AI algorithms can have significant, far-reaching effects in healthcare. Inaccurate diagnoses, skewed treatment recommendations, and disparities in access to care are all possible consequences. Consider a diagnostic tool trained on data predominantly from one demographic group. Such a tool might perform poorly on individuals from other groups, leading to misdiagnoses that can be life-threatening and that affect individuals' health, well-being, and access to quality medical care.

Moreover, biased algorithms can exacerbate existing health disparities, widening the gap in healthcare outcomes between demographic groups. Some populations could receive inadequate care while others receive better care, which underscores the urgent need for fairness and equity in AI development.
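The diagnostic-tool scenario can be made concrete by measuring performance separately for each group rather than only in aggregate. The following is a minimal sketch that computes per-group sensitivity (true positive rate); the record layout, the function name `sensitivity_by_group`, and the toy data are assumptions made for illustration.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return per-group sensitivity (true positive rate).

    Each record is a (group, y_true, y_pred) tuple with binary labels,
    where 1 means the condition is present (y_true) or predicted (y_pred).
    """
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            actual_pos[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    # Only groups with at least one positive case appear in the result.
    return {g: true_pos[g] / n for g, n in actual_pos.items()}

# Hypothetical toy data: (group, actual diagnosis, model prediction)
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0),
]
rates = sensitivity_by_group(records)
```

In this toy data the tool detects three of four true cases in group A but only one of four in group B, a gap that the overall accuracy figure would hide.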

Mitigating Bias in AI Models

Addressing bias in AI models requires a multifaceted approach that encompasses careful data curation, algorithm design, and ongoing evaluation. The training data should be diverse and representative of the population the model is meant to serve, so that no group is substantially underrepresented or overrepresented. The algorithms themselves should also be designed with fairness in mind, using techniques such as fairness-aware learning to reduce the potential for bias.
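As one illustration of a fairness-aware technique, the sketch below computes sample weights using a preprocessing approach commonly called reweighing: each (group, label) combination is weighted so that group membership and label look statistically independent in the weighted training set. This is only one of many possible methods and is not necessarily the one a given system uses; the function name and the toy data are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Return one weight per sample: expected count / observed count.

    The expected count of a (group, label) pair is what we would see if
    group and label were independent: count(group) * count(label) / n.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = group_counts[g] * label_counts[y] / n
        weights.append(expected / pair_counts[(g, y)])
    return weights

# Hypothetical data: positives are common in group A and rare in group B.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
```

The rare combinations (a negative in group A, a positive in group B) receive weights above 1 and the common ones receive weights below 1. The resulting list can be passed to any training routine that accepts per-sample weights.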

Ethical Considerations in AI Development

Ethical considerations are paramount in the development and deployment of AI algorithms in healthcare. Transparency and explainability in AI systems are essential for building trust and ensuring accountability. Developers must be transparent about the data used to train the algorithms and explain the decision-making process of the AI system. This transparency allows stakeholders to understand how the AI arrives at its conclusions, enabling appropriate scrutiny and addressing potential biases.

Developing Fair and Equitable AI Systems

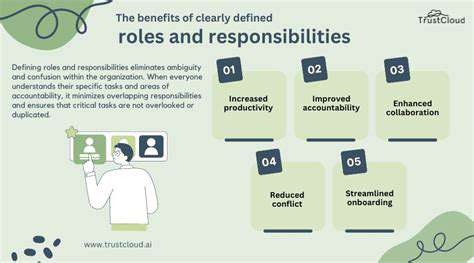

The development of fair and equitable AI systems requires a collaborative effort involving researchers, clinicians, ethicists, and policymakers. A multidisciplinary approach allows for diverse perspectives and ensures that AI systems are developed with the needs and values of diverse communities in mind. Establishing clear guidelines and regulations for the use of AI in healthcare will help to ensure that AI systems are deployed responsibly and ethically.

Accountability and Continuous Monitoring

Accountability is critical in the ongoing use of AI systems in healthcare. Mechanisms for monitoring and evaluating the performance of AI algorithms are essential to detect and correct biases as they emerge. Regular audits of AI systems and ongoing evaluation of their impact on different demographic groups are necessary to ensure fairness and equity. Feedback loops and mechanisms for addressing identified biases are crucial for continuous improvement in the performance and ethical use of AI in healthcare.
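A recurring audit can be as simple as comparing a chosen metric across demographic groups for each reporting period and flagging periods where the gap exceeds a tolerance. The sketch below assumes per-group metrics have already been computed; the threshold of 0.1, the period names, and the metric values are hypothetical placeholders, not recommended settings.

```python
def audit_gap(metric_by_group, max_gap=0.1):
    """Compare the best and worst group on one metric.

    Returns the gap and whether it exceeds the allowed tolerance.
    """
    values = list(metric_by_group.values())
    gap = max(values) - min(values)
    return {"gap": gap, "needs_review": gap > max_gap}

# Hypothetical per-group sensitivity for two reporting periods.
history = {
    "period-1": {"A": 0.90, "B": 0.88},
    "period-2": {"A": 0.91, "B": 0.74},
}
flagged = [
    period for period, metrics in history.items()
    if audit_gap(metrics)["needs_review"]
]
```

Here only the second period is flagged, which would trigger the review and correction loop described above.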

Data Privacy and Security: Protecting Sensitive Patient Information

Data Minimization and Purpose Limitation

Data minimization is a critical aspect of protecting patient information. This principle means collecting only the minimum amount of data necessary to achieve a specific, legitimate purpose. For example, if an AI system needs patient height and weight for a specific medical analysis, it should not collect additional data such as Social Security numbers or addresses unless they are essential for the same or a directly related purpose. Collecting less data reduces the potential for misuse or unauthorized access.
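In code, data minimization can be enforced with an allowlist of fields per purpose, applied before data reaches the AI system. The sketch below follows the height-and-weight example; the purpose name `bmi_analysis` and the field names are hypothetical.

```python
# Hypothetical mapping from a declared purpose to the fields it needs.
REQUIRED_FIELDS = {
    "bmi_analysis": {"height_cm", "weight_kg"},
}

def minimize(record, purpose):
    """Return a copy of the record containing only the fields the purpose needs."""
    if purpose not in REQUIRED_FIELDS:
        raise ValueError(f"no field allowlist defined for purpose {purpose!r}")
    allowed = REQUIRED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}

intake = {
    "height_cm": 172,
    "weight_kg": 68,
    "ssn": "000-00-0000",       # placeholder value, never needed for BMI
    "address": "1 Example St",  # placeholder value
}
minimal = minimize(intake, "bmi_analysis")
```

Rejecting unknown purposes outright, rather than passing the full record through, keeps the default behavior on the safe side.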

Purpose limitation goes hand in hand with data minimization. Once data is collected, it should be used only for the predefined, explicitly stated purposes. For instance, data collected for diagnosing a particular condition should not be repurposed for marketing or other unrelated activities. Adhering strictly to purpose limitation is crucial for maintaining patient trust and avoiding privacy breaches.
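Purpose limitation can be sketched by attaching the permitted purposes to the data at collection time and checking them on every use. The class `TaggedRecord`, the function `use_record`, and the purpose names below are hypothetical illustrations, not an established API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TaggedRecord:
    """Patient data bundled with the purposes it was collected for."""
    data: dict
    allowed_purposes: frozenset

def use_record(record, purpose):
    """Return the data only if the requested purpose was declared at collection."""
    if purpose not in record.allowed_purposes:
        raise PermissionError(f"data was not collected for purpose {purpose!r}")
    return record.data

record = TaggedRecord(
    data={"hba1c_percent": 6.1},
    allowed_purposes=frozenset({"diagnosis"}),
)
result = use_record(record, "diagnosis")   # permitted
# use_record(record, "marketing") would raise PermissionError
```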

Transparency and Explainability

AI systems handling patient data should be transparent in their decision-making processes. Patients should understand how the AI system arrives at diagnoses or treatment recommendations. Explainable AI (XAI) techniques can help in this area by providing insights into the reasoning behind the system's output. This transparency builds trust and allows for scrutiny, crucial for ensuring the AI's outputs are reliable and accurate.

Furthermore, clear communication channels about the use of AI in healthcare are essential. Patients should be informed about how their data is being used and who has access to it. This proactive approach fosters trust and empowers patients to make informed decisions about their health data.
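To illustrate the kind of insight an explanation can provide, the sketch below breaks a logistic-regression risk score into per-feature contributions (weight times feature value). This works directly only for linear models; more complex models need dedicated XAI methods. The weights, bias, and feature names are invented for illustration and have no clinical meaning.

```python
import math

def explain_linear(weights, bias, features):
    """Return the predicted probability and feature contributions.

    Contributions are weight * value on the log-odds scale, sorted by
    absolute size so the most influential feature comes first.
    """
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    logit = bias + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked

# Hypothetical model parameters and patient features.
weights = {"age_decades": 0.3, "systolic_bp_z": 0.8, "smoker": 1.2}
patient = {"age_decades": 5, "systolic_bp_z": 0.5, "smoker": 1}
probability, ranked = explain_linear(weights, bias=-2.0, features=patient)
```

The ranked list can be shown to a clinician or patient as "which inputs pushed this score up or down, and by how much", which supports the scrutiny described above.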

Data Security and Access Control

Robust security measures are paramount to protect patient data from unauthorized access, use, disclosure, or destruction. These measures include encryption, access controls, and regular security audits. AI systems should have security built in from the design stage, rather than added afterward, to prevent data breaches and protect sensitive information from malicious actors.

Access controls should be carefully implemented so that only authorized personnel can reach sensitive patient data. Strict adherence to these protocols helps ensure that only those with a legitimate need to know can access patient information, minimizing the risk of data breaches.
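A minimal sketch of role-based access control with an audit trail is shown below. The roles, permission names, and log format are hypothetical; a production system would rely on its platform's identity and access management rather than an in-memory dictionary.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "physician": {"read_clinical", "write_clinical"},
    "billing": {"read_billing"},
}

def check_access(role, permission, audit_log):
    """Return True if the role holds the permission; record every attempt."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({"role": role, "permission": permission, "allowed": allowed})
    return allowed

audit_log = []
physician_ok = check_access("physician", "read_clinical", audit_log)
billing_ok = check_access("billing", "read_clinical", audit_log)
```

Unknown roles get no permissions by default, and denied attempts are logged as well as granted ones so that regular security audits have something to review.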

Data Integrity and Accuracy

Ensuring the integrity and accuracy of patient data is vital. AI systems should be designed to detect and correct errors in the input data. This involves validating data entries, flagging inconsistencies, and implementing mechanisms for data correction. Maintaining the accuracy of patient information is crucial for the reliability of AI-driven diagnoses and treatment recommendations.
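Validation and inconsistency flagging might look like the following sketch, which checks a single record for missing values, implausible values, and one internal inconsistency. The field names and bounds are illustrative assumptions only and are not clinical reference ranges.

```python
def validate_vitals(record):
    """Return a list of human-readable issues found in one record."""
    issues = []
    # Illustrative plausibility bounds, not clinical reference ranges.
    bounds = {"height_cm": (30, 250), "weight_kg": (1, 400)}
    for field, (low, high) in bounds.items():
        value = record.get(field)
        if value is None:
            issues.append(f"{field}: missing")
        elif not low <= value <= high:
            issues.append(f"{field}: {value} outside {low}-{high}")
    # Cross-field consistency check.
    systolic = record.get("systolic_mmhg")
    diastolic = record.get("diastolic_mmhg")
    if systolic is not None and diastolic is not None and systolic <= diastolic:
        issues.append("blood pressure: systolic should exceed diastolic")
    return issues

clean = validate_vitals(
    {"height_cm": 170, "weight_kg": 70, "systolic_mmhg": 120, "diastolic_mmhg": 80}
)
suspect = validate_vitals(
    {"height_cm": 1700, "systolic_mmhg": 80, "diastolic_mmhg": 120}
)
```

The function flags problems instead of silently fixing them, so that a person can decide how each record should be corrected.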

Bias Mitigation and Fairness

AI systems can inadvertently perpetuate biases present in the data they are trained on. These biases can lead to unfair or discriminatory outcomes in healthcare. Therefore, careful consideration must be given to the data used to train AI models and to the development of algorithms that mitigate potential biases. Regular audits to identify and address biases are critical to ensure fairness and equity in AI-driven healthcare decisions.

Accountability and Oversight

A clear framework for accountability is essential for AI systems in healthcare. This includes establishing mechanisms for identifying and addressing errors or malfunctions in AI systems, as well as procedures for handling complaints and addressing patient concerns. Clear lines of responsibility and oversight are necessary to ensure that AI systems are used ethically and effectively in healthcare settings.

Patient Consent and Control

Patient consent is critical for the ethical use of patient data in AI systems. Patients should give informed consent before their data is used in AI applications and should be able to withdraw that consent at any time. They should also have the right to access, correct, and delete their data. Empowering patients with control over their data is crucial for maintaining their trust and autonomy in the healthcare system.
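Consent that can be granted and withdrawn at any time implies that systems check it at the moment of use rather than once at collection. The sketch below shows a hypothetical in-memory `ConsentRegistry`; the class, its method names, and the purpose strings are assumptions for illustration, and a real system would persist and audit these records.

```python
class ConsentRegistry:
    """Tracks which purposes each patient currently consents to."""

    def __init__(self):
        self._consents = {}

    def grant(self, patient_id, purpose):
        self._consents.setdefault(patient_id, set()).add(purpose)

    def withdraw(self, patient_id, purpose):
        # Withdrawing consent that was never given is a harmless no-op.
        self._consents.get(patient_id, set()).discard(purpose)

    def has_consent(self, patient_id, purpose):
        return purpose in self._consents.get(patient_id, set())

registry = ConsentRegistry()
registry.grant("patient-001", "ai_diagnosis")
before = registry.has_consent("patient-001", "ai_diagnosis")
registry.withdraw("patient-001", "ai_diagnosis")
after = registry.has_consent("patient-001", "ai_diagnosis")
```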