Linear Discriminant Analysis (LDA)

Understanding the Core Concept of LDA

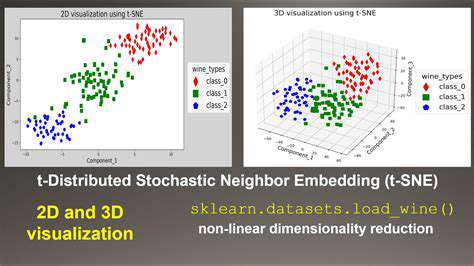

Linear Discriminant Analysis (LDA) is a supervised dimensionality reduction technique: it needs class labels to compute its projection, although it can be adapted to unsupervised scenarios. At its core, LDA seeks a linear transformation that maximizes the separation between the classes in a dataset. It projects the data onto a lower-dimensional subspace spanned by the directions along which the classes are most separable. This reduces dimensionality while retaining the information that distinguishes the classes.
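As a minimal sketch, assuming scikit-learn is installed, the example below projects the classic Iris dataset (4 features, 3 classes) onto its two discriminant directions using scikit-learn's `LinearDiscriminantAnalysis`. The dataset and library are illustrative choices, not something the text above prescribes.

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Iris: 150 samples, 4 features, 3 classes.
X, y = load_iris(return_X_y=True)

# With 3 classes, LDA can produce at most 3 - 1 = 2 discriminant directions.
lda = LinearDiscriminantAnalysis(n_components=2)

# Unlike unsupervised methods, fitting requires the class labels y.
X_proj = lda.fit_transform(X, y)

print(X.shape, "->", X_proj.shape)  # (150, 4) -> (150, 2)
# Relative discriminative power captured by each of the two directions.
print(lda.explained_variance_ratio_)
```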

Mathematical Formulation of LDA

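Mathematically, LDA seeks a projection matrix W that maximizes the ratio of between-class scatter to within-class scatter in the projected space (the Fisher criterion). Computing it involves the mean vector of each class, the within-class scatter matrix S_W (the spread of samples around their own class mean), and the between-class scatter matrix S_B (the spread of the class means around the overall mean). The columns of W are the eigenvectors corresponding to the largest eigenvalues of the generalized eigenvalue problem S_B w = λ S_W w, which is equivalent to the ordinary eigenvalue problem for S_W⁻¹ S_B when S_W is invertible. Because S_B has rank at most C − 1 for C classes, at most C − 1 eigenvalues are nonzero, so LDA yields at most C − 1 useful directions. Within this linear framework, the resulting transformation is optimal with respect to the scatter ratio.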
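To make the steps concrete, here is a from-scratch sketch using NumPy and SciPy. It builds S_W = Σ_c Σ_{x∈c} (x − μ_c)(x − μ_c)ᵀ and S_B = Σ_c N_c (μ_c − μ)(μ_c − μ)ᵀ, where μ_c and N_c are the mean and size of class c and μ is the overall mean, then solves S_B w = λ S_W w with `scipy.linalg.eigh`. The function name `lda_fit` is hypothetical, and the sketch assumes S_W is positive definite (it is not when there are more features than samples).

```python
import numpy as np
from scipy.linalg import eigh
from sklearn.datasets import load_iris


def lda_fit(X, y, n_components):
    """Hypothetical helper: return (W, eigenvalues) from the Fisher criterion."""
    n_features = X.shape[1]
    overall_mean = X.mean(axis=0)

    S_W = np.zeros((n_features, n_features))  # within-class scatter
    S_B = np.zeros((n_features, n_features))  # between-class scatter
    for c in np.unique(y):
        X_c = X[y == c]
        mean_c = X_c.mean(axis=0)
        centered = X_c - mean_c
        S_W += centered.T @ centered
        diff = (mean_c - overall_mean).reshape(-1, 1)
        S_B += X_c.shape[0] * (diff @ diff.T)

    # Generalized symmetric eigenproblem S_B w = lambda S_W w.
    # eigh returns eigenvalues in ascending order, so sort them descending.
    eigvals, eigvecs = eigh(S_B, S_W)
    order = np.argsort(eigvals)[::-1]
    return eigvecs[:, order[:n_components]], eigvals[order]


X, y = load_iris(return_X_y=True)
W, eigvals = lda_fit(X, y, n_components=2)
X_proj = X @ W  # shape (150, 2)
print(eigvals)  # only the first C - 1 = 2 values are meaningfully nonzero
```

With three classes, the last two eigenvalues come out numerically zero, which matches the rank argument for S_B.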

LDA in Unsupervised Learning

While LDA is traditionally used in supervised settings, it can be adapted to unsupervised learning by first clustering the data and then treating the cluster assignments as class labels. The goal is to find a lower-dimensional representation that captures the underlying structure of the data even though no true labels exist. This approach is often used in exploratory data analysis to visualize clusters and identify patterns in the data.

This unsupervised variant does reduce dimensionality, but it is generally less reliable than LDA with true class labels, because the projection can only be as good as the cluster assignments it is given. If the clusters are poorly defined, the separation between them will be less clear, and the resulting representation may fail to reflect the actual structure of the data.
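The following sketch illustrates the cluster-then-project idea. It assumes k-means as the clustering step and scikit-learn as the library; the text above does not name a specific clustering method. Synthetic data is generated with `make_blobs`, its true labels are discarded, and LDA is fitted on the k-means assignments instead.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Synthetic "unlabeled" data: make_blobs returns labels, but we discard them.
X, _ = make_blobs(n_samples=300, centers=4, n_features=5, random_state=0)

# Step 1 (assumed choice): derive pseudo-labels with k-means.
cluster_labels = KMeans(n_clusters=4, n_init=10, random_state=0).fit_predict(X)

# Step 2: treat the cluster assignments as if they were class labels.
lda = LinearDiscriminantAnalysis(n_components=2)
X_2d = lda.fit_transform(X, cluster_labels)
print(X_2d.shape)  # (300, 2)
```

Because LDA maximizes separation between whatever groups it is handed, the projection will tend to make the k-means clusters look well separated whether or not they reflect real structure, which is one reason this variant is less trustworthy than LDA on true labels.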

Comparison with Other Dimensionality Reduction Techniques

LDA is often compared to Principal Component Analysis (PCA), another popular dimensionality reduction technique. PCA is unsupervised: it finds the directions of maximum variance in the entire dataset without using class labels. LDA, by contrast, uses the labels to find the directions that best separate the classes. The direction of greatest variance is not necessarily the one that best discriminates between classes, so when class information is available, LDA often produces a more useful representation for classification. When class labels are not available, PCA is usually the more suitable choice.
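A small synthetic example, assuming NumPy and scikit-learn, shows how the two objectives can disagree. Feature 0 has large variance but is identical for both classes, while feature 1 has small variance but different class means. PCA picks the high-variance, uninformative direction; LDA picks the discriminative one. The helper `fisher_ratio` is a hypothetical name introduced here to score a 1-D projection by between-class over within-class variance.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

rng = np.random.default_rng(0)
n = 200
# Feature 0: large variance, same distribution in both classes (uninformative).
# Feature 1: small variance, but the class means differ (informative).
X0 = np.column_stack([rng.normal(0, 10, n), rng.normal(-1, 0.3, n)])
X1 = np.column_stack([rng.normal(0, 10, n), rng.normal(+1, 0.3, n)])
X = np.vstack([X0, X1])
y = np.array([0] * n + [1] * n)


def fisher_ratio(z, y):
    """Hypothetical helper: between-class over within-class variance of 1-D data."""
    m0, m1 = z[y == 0].mean(), z[y == 1].mean()
    return (m0 - m1) ** 2 / (z[y == 0].var() + z[y == 1].var())


# PCA ignores y; LDA uses it.
z_pca = PCA(n_components=1).fit_transform(X).ravel()
z_lda = LinearDiscriminantAnalysis(n_components=1).fit_transform(X, y).ravel()

print("PCA 1-D Fisher ratio:", fisher_ratio(z_pca, y))
print("LDA 1-D Fisher ratio:", fisher_ratio(z_lda, y))
```

On data like this, the PCA projection leaves the classes almost completely overlapping, while the LDA projection separates them clearly.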

Practical Applications of LDA

LDA has applications in many fields. In image processing, it is used to reduce the dimensionality of images while preserving the features that distinguish classes; a well-known example is the Fisherfaces method for face recognition. In text analysis, it can reduce the dimensionality of document feature vectors before classification. Note that extracting topics from a collection of documents is typically the job of Latent Dirichlet Allocation, a different method that is also abbreviated LDA. In bioinformatics, LDA can help classify samples, genes, or proteins into known categories. More generally, it suits any task that requires efficient dimensionality reduction while preserving the information that distinguishes classes.

Advantages and Disadvantages of LDA

LDA offers several advantages, including its ability to separate classes effectively and a clear mathematical framework that makes the procedure easy to interpret. However, LDA assumes that the data within each class is normally distributed and that all classes share the same covariance matrix, and real-world datasets often violate these assumptions. Additionally, because LDA relies on estimated class means and covariance matrices, its performance can be sensitive to outliers in the data. Care must be taken to check for and mitigate these issues when applying LDA in real-world scenarios.